Python Backpropagation Network

By: Samuel

As an exercise, try to implement this algorithm in Python (or your favorite programming language). Backpropagation consists of two phases: the first is a feedforward pass, and the second is a backward pass in which the weights and biases are optimized. Backpropagation can be written as a function of the neural network, and it is probably the most fundamental building block in a neural network. Ever since nonlinear functions that work recursively (i.e., artificial neural networks) were introduced to the world of machine learning, their applications have been booming.

I am trying to build a simple neural network class from scratch using NumPy, and test it using the XOR problem. I have been learning about Artificial Neural Networks (ANNs) recently and have code working in Python based on mini-batch training.

A notation for thinking about how to configure Truncated Backpropagation Through Time and the canonical configurations used in research and by deep learning libraries.

The first phase is called a forward pass: the data is traversed through all the neurons from the first layer to the last (also known as the output layer).
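The forward pass described above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the 2-2-1 layer sizes, the sigmoid activation, the squared-error cost and the names `forward`, `W1`, `b1`, `W2`, `b2` are choices made for the example, not something the text prescribes.

```python
import numpy as np


def sigmoid(x):
    """Squash each value into the open interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))


def cost(predicted, truth):
    """Element-wise squared error between prediction and target."""
    return (truth - predicted) ** 2


def forward(X, W1, b1, W2, b2):
    """Hypothetical helper: push the inputs through one hidden layer and the output layer."""
    hidden = sigmoid(X @ W1 + b1)       # activations of the hidden layer
    output = sigmoid(hidden @ W2 + b2)  # activations of the output layer
    return hidden, output


# The four XOR input patterns and their targets.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)

# Assumed architecture: 2 inputs, 2 hidden neurons, 1 output.
rng = np.random.default_rng(0)
W1 = rng.normal(size=(2, 2))
b1 = np.zeros((1, 2))
W2 = rng.normal(size=(2, 1))
b2 = np.zeros((1, 1))

hidden, output = forward(X, W1, b1, W2, b2)
loss = cost(output, y).mean()
print("untrained predictions:", output.ravel())
print("mean squared error:", loss)
```

With random, untrained weights the predictions are arbitrary; the backward pass is what later moves them toward the targets.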

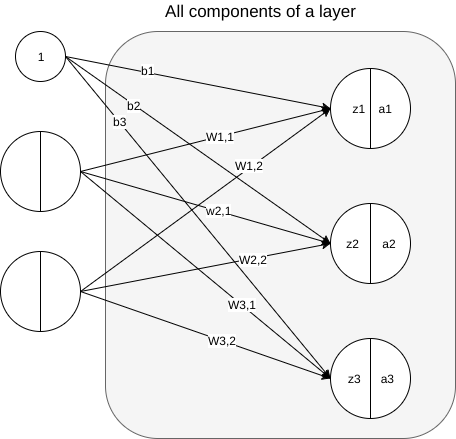

The forward pass is the first step in training a neural network: the data flows from the input layer to the output layer through the hidden layers. The backpropagation algorithm is used to train a neural network effectively. In essence, it is a process that helps neural networks adjust their internal parameters to make more accurate predictions. The name stands for “backward propagation of errors.”

Comparison with PyTorch results: one complete epoch consists of the forward pass, the backpropagation, and the weight/bias update. Now that we have derived the formulas for the forward pass and backpropagation for our simple neural network, let’s compare the output from our calculations with the output from PyTorch. Our neural network has two hidden layers.

BackPropagationNN is a simple one-hidden-layer neural network module for Python. An example of such a network is presented in Figure 1; the NN is shown in the figure below.

Python AI: Starting to Build Your First Neural Network. This article focuses on the implementation of back-propagation in Python. I’m trying to implement my own network in Python and I thought I’d look at some other libraries.

Imagine teaching a neural network to recognize handwritten digits. We’ll lightly use this story as a checkpoint. aᴴₘ is the m-th neuron of the last layer (H).

For each weight-synapse, multiply its output delta and input activation to get the gradient of the weight.
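The weight-gradient rule can be illustrated for a whole layer at once: the gradient of every weight is the product of the activation feeding it and the delta of the neuron it feeds, which is an outer product. The numbers, the `weight_gradient` helper and the learning rate below are made up for the example; the update step (subtracting a fraction of the gradient) is the usual gradient-descent assumption rather than something stated in the text.

```python
import numpy as np


def weight_gradient(input_activation, output_delta):
    """Hypothetical helper: gradient of each weight = input activation * output delta."""
    return np.outer(input_activation, output_delta)


# Illustrative values: 2 neurons feed a layer of 3 neurons.
a_in = np.array([0.5, 1.0])             # activations entering the weights
delta_out = np.array([0.1, -0.2, 0.3])  # deltas of the neurons the weights feed

grad_W = weight_gradient(a_in, delta_out)  # shape (2, 3), one entry per weight

# Assumed update rule: move each weight against its gradient.
learning_rate = 0.1
W = np.zeros((2, 3))
W -= learning_rate * grad_W
print(grad_W)
```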

Neural network backpropagation with RELU

Python simple backpropagation not working as expected. Backpropagation algorithm implemented using pure Python and NumPy based on mathematical derivation.

Neural Network Back-Propagation Using Python

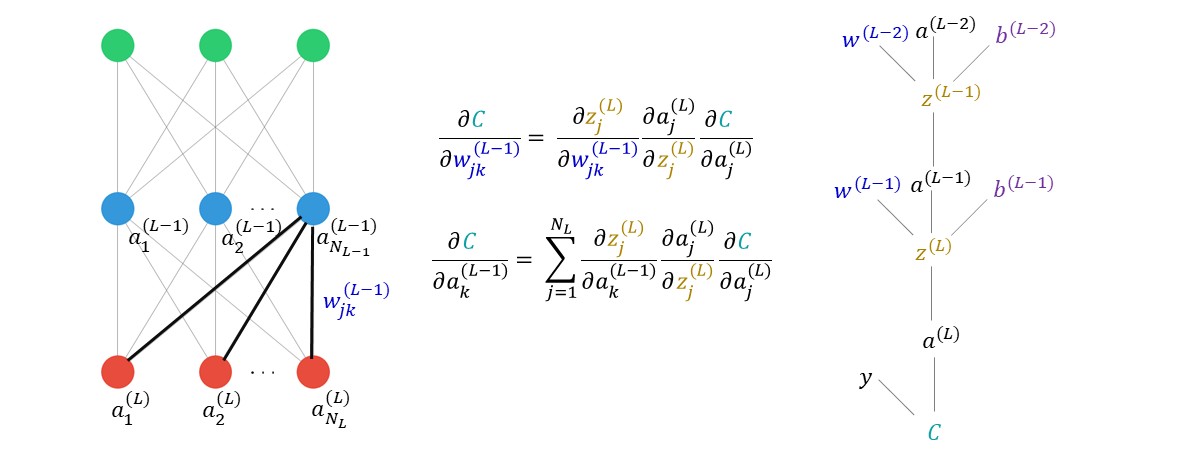

How to Code a Neural Network with Backpropagation in Python (from scratch). Difference between numpy dot() and Python 3.5+ matrix multiplication. Straight line equation. The demo begins by displaying the versions of Python and NumPy.

This article introduces the essence of backpropagation (BP), a technique that is very popular in machine learning and deep learning: gradient descent and the chain rule.

I wanted to predict heart disease using the backpropagation algorithm for neural networks. I followed Michael Nielsen’s book Neural Networks and Deep Learning, which gives a step-by-step explanation of each algorithm for beginners. I’m learning about neural networks, specifically looking at MLPs with a back-propagation implementation.

Backpropagation is the cornerstone of training neural networks, allowing them to learn from data. It is a supervised learning technique used to adjust the weights of a neural network to minimize the difference between the predicted output and the actual output. The need for optimization.

Backward propagation of the propagation’s output activations through the neural network, using the training pattern target, generates the deltas of all output and hidden neurons. Phase 2: Weight update.

δ is ∂J/∂z. A multi-layer perceptron, where `L = 3`.
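To make δ = ∂J/∂z concrete, here is a hedged sketch that computes the deltas of the output and hidden neurons for a single training pattern. It assumes sigmoid activations, the quadratic loss J = ½‖a − y‖², one hidden layer and no biases; the function names `loss_fn` and `deltas` and all sizes are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def loss_fn(x, y, W1, W2):
    """Quadratic loss J = 0.5 * ||a2 - y||^2 (an assumption of this sketch)."""
    a1 = sigmoid(x @ W1)
    a2 = sigmoid(a1 @ W2)
    return 0.5 * np.sum((a2 - y) ** 2)


def deltas(x, y, W1, W2):
    """Return the hidden activations and delta = dJ/dz for both layers."""
    a1 = sigmoid(x @ W1)
    a2 = sigmoid(a1 @ W2)
    # Output layer: dJ/da2 * da2/dz2, with sigmoid'(z) = a * (1 - a).
    delta2 = (a2 - y) * a2 * (1 - a2)
    # Hidden layer: chain rule carries delta2 back through W2.
    delta1 = (delta2 @ W2.T) * a1 * (1 - a1)
    return a1, delta1, delta2


rng = np.random.default_rng(1)
x = rng.normal(size=(1, 3))   # one training pattern with 3 features
y = np.array([[1.0, 0.0]])    # its target
W1 = rng.normal(size=(3, 4))
W2 = rng.normal(size=(4, 2))

a1, delta1, delta2 = deltas(x, y, W1, W2)
grad_W1 = x.T @ delta1        # input activation times output delta
grad_W2 = a1.T @ delta2
```

A finite-difference check on `loss_fn` (perturb one weight slightly and compare the change in loss with the corresponding gradient entry) is a cheap way to confirm that a hand-written backward pass is correct.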

Multi-Layer Perceptron & Backpropagation

Backpropagation was first introduced in the 1960s and popularized almost 30 years later (1986) by Rumelhart, Hinton and Williams in a paper called “Learning representations by back-propagating errors”. Build an Artificial Neural Network by implementing the Backpropagation algorithm and test the same using appropriate data sets. Neural network with two neurons.

When we first encounter neural networks, one very important algorithm to learn is backpropagation. Today’s mainstream frameworks all have an autograd (automatic differentiation) mechanism, which means we do not need to write the backward pass ourselves and can simply call …

L=0 is the first hidden layer, L=H is the last layer.

A beginner’s guide to deriving and implementing backpropagation

Keras does backpropagation automatically. You just need to take care of a few things: the variables you want to be updated with backpropagation (that is, the weights) must be defined in the custom layer with the self.…

In the class, I construct instances by passing in the size of each layer and the activation functions to use at each layer. Implementing general back-propagation.

In this post, you will learn about the concepts of the backpropagation algorithm used in training neural network models, along with Python …

Next, we’ll train two versions of the neural network, each using a different activation function on the hidden layers: one will use the rectified linear unit (ReLU) and the other will use the hyperbolic tangent function (tanh). Neural Network Using ReLU Activation.

Forward pass: propagating the input through the neural network in order to get the output.

For this I used the UCI heart disease data set linked here: processed cleveland.

Master Backpropagation with TensorFlow in Python 3

It uses NumPy for the matrix calculations. The Python code example provided in this article demonstrates how to implement the backpropagation algorithm for a simple neural network. What the script is trying to do is to train an XOR gate.

Feedforward pass: the first step in building a neural network is generating an output from input data. The first part of training a neural network is getting it to generate a prediction. Next, a non-linear activation function (A) is added. These probabilities sum to 1.

Backpropagation example. There we considered quadratic loss and derived the corresponding equations. In this case the weights will be updated sequentially from the last layer to the input layer with respect to the confidence of the current results. (Note that the biases are omitted for simplicity.)

Part 3: Hidden layers trained by backpropagation (this). Part 4: Vectorization of the operations. Part 5: Generalization to multiple layers.

Backpropagation is a technique used to …

This repository contains the course assignments of CSE 474 (Pattern Recognition) taken between February 2020 and December 2020 at Bangladesh University of Engineering and Technology (BUET).

Although it is possible to install Python and NumPy separately, it’s becoming …

How to implement a neural network (3/5)


Full-matrix approach to backpropagation in Artificial Neural Network. Before we start programming, let’s stop for a moment and prepare a basic roadmap. The main features of backpropagation are the iterative, recursive and efficient method through which it … Backpropagation algorithms are a set of methods used to efficiently train artificial neural networks following a gradient descent approach which exploits the chain rule. As a result, it is very good at solving complicated machine learning problems, such as classifying images and processing natural language.

Without a non-linear activation function, the whole network would collapse to a linear transformation, thus failing to serve its purpose.

The first thing you’ll need to do is represent the inputs with Python and NumPy. Imagine that we have a binary classification problem with two binary inputs and a single binary output. For this article, we will do the forward pass by hand. With Keras, there’s absolutely nothing you need to do for backpropagation except train the model with one of the fit methods.

Python Neural Network Backpropagation. We have already discussed the mathematical underpinnings of back-propagation in the previous article linked below. I am trying to understand how backpropagation works. Categorical Cross-Entropy Given One Example.
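As a small illustration of categorical cross-entropy for one example, the sketch below turns raw scores into probabilities with softmax and scores them against a one-hot target. The logits, the three-class setup and the helper names are invented for the example; the only fact relied on is the standard result that, for softmax followed by cross-entropy, the gradient with respect to the logits is the predicted probabilities minus the target.

```python
import numpy as np


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    shifted = logits - np.max(logits)  # subtract the max for numerical stability
    exps = np.exp(shifted)
    return exps / np.sum(exps)


def categorical_cross_entropy(probs, one_hot):
    """Loss for a single example with a one-hot target."""
    return -np.sum(one_hot * np.log(probs))


# Illustrative values: raw scores for 3 classes; the true class is index 0.
logits = np.array([2.0, 1.0, 0.1])
target = np.array([1.0, 0.0, 0.0])

probs = softmax(logits)
loss = categorical_cross_entropy(probs, target)

# Gradient of the loss with respect to the logits.
grad_logits = probs - target
print(probs, loss)
```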

Building a Neural Network from Scratch (with Backpropagation)

There is also a demo using the sklearn digits dataset that achieves ~97% accuracy on the test dataset with a hidden layer of 60 neurons. The demo Python program uses back-propagation to create a simple neural network model that can predict the species of an iris flower using the famous Iris Dataset. Back propagation and structure of a neural network in scikit-neuralnetwork.

You’ll use a method called backward propagation, which is one of the most important techniques in deep learning. In this context, proper training of a neural network is the most important aspect of making a reliable model. Back-propagation (BP) is how most neural network (NN) models in deep learning currently update their gradients; this article walks through the derivation of the NN forward and backward passes one step at a time.

My neural network is very simple. Our net starts with a vectorized linear equation, where the layer number is indicated in square brackets. In the case of a regression problem, the output would not be applied to an …

Backpropagation: the final step is updating the weights and biases of the network using the backpropagation algorithm. With those derivatives we can train the network: the derivative tells you the direction in which to go if you want to increase the function (in this case, the loss), so stepping in the opposite direction decreases it.

A ReLU layer works exactly like any other hidden layer, except that instead of tanh(x), sigmoid(x) or whatever activation you use, you’ll use f(x) = max(0, x). How to implement the ReLU function in NumPy.
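One possible NumPy version of f(x) = max(0, x), together with the derivative that the backward pass needs. Treating the derivative at exactly 0 as 0 is a convention chosen for this sketch, and the function names are illustrative.

```python
import numpy as np


def relu(x):
    """Rectifier: f(x) = max(0, x), applied element-wise."""
    return np.maximum(0, x)


def relu_derivative(x):
    """1 where x > 0, otherwise 0 (the value at x == 0 is a convention)."""
    return (x > 0).astype(float)


z = np.array([-2.0, 0.0, 3.0])
print(relu(z))
print(relu_derivative(z))
```

In a network that already backpropagates through sigmoid layers, swapping in ReLU only means replacing the activation and the factor that multiplies the incoming delta.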

To do this, I used the code found on the following blog: Build a flexible Neural Network with Backpropagation in Python, and changed it a little according to my own dataset. Backpropagation is a robust algorithm that trains many neural network architectures, such as feedforward neural networks, recurrent neural networks, convolutional neural networks, and more.

Backpropagation of neural network. Python Program to Implement and Demonstrate Backpropagation Algorithm Machine Learning. You’ll do that by creating a weighted sum of the variables.

So I wrote a straightforward script to try to understand it before writing a generalized algorithm. If you have written code for a working multilayer network with sigmoid activation, it’s literally a one-line change. Our goal is to create a program capable of creating a densely connected neural network with the specified architecture (number and size of layers and appropriate activation function).
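A minimal sketch of how such an architecture could be specified and its parameters initialised. The list-of-dicts format, the key names, the `init_layers` helper and the 0.1 scaling of the random weights are all assumptions made for illustration.

```python
import numpy as np

# Hypothetical architecture description: one dict per dense layer.
NN_ARCHITECTURE = [
    {"input_dim": 2, "output_dim": 4, "activation": "relu"},
    {"input_dim": 4, "output_dim": 4, "activation": "relu"},
    {"input_dim": 4, "output_dim": 1, "activation": "sigmoid"},
]


def init_layers(architecture, seed=0):
    """Create small random weights and zero biases for every layer."""
    rng = np.random.default_rng(seed)
    params = {}
    for idx, layer in enumerate(architecture, start=1):
        n_in, n_out = layer["input_dim"], layer["output_dim"]
        # Each weight matrix maps n_in inputs to n_out outputs.
        params[f"W{idx}"] = rng.normal(size=(n_out, n_in)) * 0.1
        params[f"b{idx}"] = np.zeros((n_out, 1))
    return params


params = init_layers(NN_ARCHITECTURE)
for name, value in params.items():
    print(name, value.shape)
```

Keeping the architecture as plain data means the same forward and backward code can be reused for networks of different depth.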

Backpropagation in Neural Network (NN) with Python

What is backpropagation?

Forward Pass & Backpropagation In Neural Networks

Finally, we’ll use the parameters we get from both neural networks to classify training examples and compute the training … Python Program to Implement the Backpropagation Algorithm Artificial Neural Network. In other words, multiplying the input X with each of the tf.Variable in your code.

To perceive how the backward propagation is calculated, we first need to overview the forward propagation. At the end of this post, you will understand how to build neural networks from scratch. Steps: as we can see in the above image, the inputs are nothing but features.

What’s wrong with my backpropagation? There is also a fully working …

This network can be represented graphically as shown. This is the third part of a 5-part tutorial on how to implement neural networks from scratch in Python: Part 1: Gradient descent. Part 2: Classification.

Thoroughly understanding the backpropagation algorithm. Backpropagation with Rectified Linear Units.

With the backpropagation algorithm we now get access to the derivatives of the loss with respect to the weight matrices and with respect to the bias vectors. 2 inputs, 2 hidden neurons, and 1 output.
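Putting the pieces together, the following sketch trains a network with 2 inputs, 2 hidden neurons and 1 output on the XOR patterns, using the derivatives with respect to the weight matrices and bias vectors in a plain gradient-descent loop. The sigmoid activations, quadratic loss, seed, learning rate and epoch count are assumptions of the example. With only two hidden neurons, training can stall on some initialisations, so the sketch reports the loss rather than promising a perfect XOR.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)

rng = np.random.default_rng(42)
W1 = rng.normal(size=(2, 2))
b1 = np.zeros((1, 2))
W2 = rng.normal(size=(2, 1))
b2 = np.zeros((1, 1))

learning_rate = 0.5  # assumed value
losses = []

for epoch in range(5000):
    # Forward pass.
    a1 = sigmoid(X @ W1 + b1)
    a2 = sigmoid(a1 @ W2 + b2)
    losses.append(float(np.mean((a2 - y) ** 2)))

    # Backward pass: deltas for the loss 0.5 * sum((a2 - y)^2).
    delta2 = (a2 - y) * a2 * (1 - a2)
    delta1 = (delta2 @ W2.T) * a1 * (1 - a1)

    # Gradient-descent update of weights and biases.
    W2 -= learning_rate * (a1.T @ delta2)
    b2 -= learning_rate * delta2.sum(axis=0, keepdims=True)
    W1 -= learning_rate * (X.T @ delta1)
    b1 -= learning_rate * delta1.sum(axis=0, keepdims=True)

print("first loss:", losses[0])
print("last loss:", losses[-1])
print("predictions:", a2.ravel())
```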

Tutorial Backpropagation: Teori dan Implementasi

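Source: [1] Working of Backpropagation Neural Networks.

Machine learning: understanding the backpropagation algorithm, with a Python implementation. This article works through the backpropagation algorithm encountered in machine learning. It assumes the reader already knows what a neuron in a neural network is, how the activation function is defined, and the propagation formulas of the backward pass.

Understanding how it works will give you a strong foundation to build on in the second half of the course.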

Let’s code a Neural Network in plain NumPy

How to convert deep learning gradient descent equation into python.

Backpropagation: Step-By-Step Derivation

Neural Networks: Forward pass and Backpropagation

Learn how to optimize the predictions generated by your neural networks.

Neural networks fundamentals with Python


Understanding Backpropagation Algorithm

Training a network. Backpropagation (BP) #1: how it works, with explanation. But the backpropagation function (backprop) does not seem to be working correctly.

This is about neural networks trained by error backpropagation (BackPropagation Neural Network, BPNN). Deep learning can basically be realized with the same learning method. I made slides explaining BPNN; feel free to use both the PDF and the slides.

Consider a three-layer NN. It has an input layer (Layer 0), a hidden layer (Layer 1) and an output layer (Layer 2). You want to know how to implement BP in Python and …

Backpropagation is an algorithm that computes gradients quickly. Why do we need it? Consider that today’s neural networks easily have tens of millions of parameters, and every time a parameter is updated, its gradient (the partial derivative of the cost function with respect to that parameter) has to be computed. Without an efficient, faster way to compute this for all the parameters …

Forward propagation: let X be the input vector to the neural network. This approach is called backpropagation. Besides, I want to restructure the steps in your question. Input X: the input of the neural network.

If we train a simple single-layered perceptron (without backpropagation), we could do something like this: `def sigmoid(x): return 1 / (1 + np.exp(-x))`, which returns values between 0 and 1, and `def cost(predicted, truth): return (truth - predicted)**2`.
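A single-layered perceptron of this kind can be trained without any backward pass through hidden layers, because the gradient of the cost reaches the weights in one step. The sketch below is one way to do it under assumptions of its own: the OR gate as training data (chosen because it is linearly separable), zero initial weights, and the learning rate and epoch count shown.

```python
import numpy as np


def sigmoid(x):
    """Return values between 0 and 1."""
    return 1.0 / (1.0 + np.exp(-x))


def cost(predicted, truth):
    """Element-wise squared error."""
    return (truth - predicted) ** 2


# Assumed training data: the OR gate.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
truth = np.array([0.0, 1.0, 1.0, 1.0])

weights = np.zeros(2)
bias = 0.0
learning_rate = 0.5  # assumed value

for _ in range(5000):
    predicted = sigmoid(X @ weights + bias)
    # d(cost)/dz = 2 * (predicted - truth) * sigmoid'(z)
    grad_z = 2 * (predicted - truth) * predicted * (1 - predicted)
    weights -= learning_rate * (X.T @ grad_z)
    bias -= learning_rate * grad_z.sum()

predicted = sigmoid(X @ weights + bias)
print(np.round(predicted, 3))
```

The same loop cannot learn XOR, since a single layer can only separate the inputs with a straight line; that limitation is what motivates hidden layers and backpropagation.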

Generally: a ReLU is a unit that uses the rectifier activation function. CHAPTER 2: How the backpropagation algorithm works. Loss: the mismatch between the obtained output in step 2 and the expected output.
