k-NN Algorithm: vincentfpgarcia/kNN-CUDA: Fast k nearest neighbor search using GPU

By: Samuel

Contactless Elevator Button Control System Based on Weighted K-NN Algorithm for AI Edge Computing Environment. Abstract: In recent years, attempts have been made to create door-opening and elevator buttons that operate based on gestures when people enter and exit a building.

When a tree-based structure such as a kd-tree is used for k-NN classification, the whole dataset is rearranged into a binary tree, so that when test data is provided, the result is obtained by traversing the tree instead of comparing the test point against every stored point. The k-nearest neighbor (k-NN) algorithm is one of the most widely used classification algorithms because it is simple and easy to implement. Now that we have discussed the theory behind the KNN algorithm, let's implement it in Python using scikit-learn.
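The tree traversal described above can be illustrated with a small kd-tree. The following is a minimal sketch in plain Python under our own assumptions: points are tuples of equal length, the tree splits on the median along alternating axes, and the search returns only the single nearest neighbour (extending it to k neighbours would need a bounded priority queue). The names `build_kdtree`, `nearest`, and `Node` are hypothetical and are not scikit-learn's API.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Point = Tuple[float, ...]


@dataclass
class Node:
    point: Point
    axis: int                      # coordinate this node splits on
    left: Optional["Node"] = None  # points with smaller values on `axis`
    right: Optional["Node"] = None # points with larger or equal values


def build_kdtree(points: Sequence[Point], depth: int = 0) -> Optional[Node]:
    """Recursively split the points on the median along alternating axes."""
    if not points:
        return None
    axis = depth % len(points[0])
    pts = sorted(points, key=lambda p: p[axis])
    mid = len(pts) // 2
    return Node(pts[mid], axis,
                build_kdtree(pts[:mid], depth + 1),
                build_kdtree(pts[mid + 1:], depth + 1))


def nearest(node: Optional[Node], query: Point, best=None):
    """Return (distance, point) for the stored point closest to `query`."""
    if node is None:
        return best
    d = math.dist(node.point, query)
    if best is None or d < best[0]:
        best = (d, node.point)
    diff = query[node.axis] - node.point[node.axis]
    near, far = (node.left, node.right) if diff < 0 else (node.right, node.left)
    best = nearest(near, query, best)
    # Only descend into the far subtree if the splitting plane is closer
    # than the best distance found so far; otherwise it cannot contain a winner.
    if abs(diff) < best[0]:
        best = nearest(far, query, best)
    return best
```

For example, `nearest(build_kdtree([(2, 3), (5, 4), (9, 6), (4, 7), (8, 1), (7, 2)]), (9, 2))` finds `(8, 1)`. The pruning test is what lets the search skip whole subtrees, which is the advantage over checking every point.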

Weighted K-NN

Understanding the Intuition Behind the KNN Algorithm. Because it is so simple, it is often one of the first classifiers that a data scientist learns. By the end of this lesson, you'll be able to explain how the k-nearest neighbors algorithm works.

Weighted kNN is a modified version of k-nearest neighbors. Today we'll learn our first classification model, KNN, and discuss the concepts of the bias-variance tradeoff and cross-validation. In the KNN algorithm (as implemented in R), K specifies the number of neighbors, and the algorithm proceeds as follows: first, choose the number K of neighbors.

Day 3 — K-Nearest Neighbors and Bias

kd_tree: a kd-tree is a binary search tree in which each node holds a point with more than one coordinate (for example an x, y pair when the data are plotted in XY coordinates). Each input variable can be considered one dimension of a p-dimensional space.

The algorithm returns (1) the indexes (positions) of the k nearest points in the reference point set and (2) the k associated Euclidean distances. We provide 3 CUDA implementations of this algorithm; one of them, knn_cuda_global, computes the k-NN using GPU global memory to store the reference and query points, the distances, and the indexes.

In this article, we will examine how the KNN algorithm works, the parameters that affect it, distance metrics, the advantages and disadvantages of KNN, and its real-world use cases. Finally, we will build a model that visualizes the effect of changing the number of neighbors, to help select a suitable K. Beginners can master this algorithm even in the early phases of their machine learning studies.

Consider the following table: it consists of the height, age, and weight (target) values for 10 people. Select k and the weighting method. K (number of neighbors) specifies how many nearest neighbors the computation is based on; in other words, choose a value of k, the number of nearest neighbors to retrieve when making predictions.

An algorithm is a set of instructions. The edge weights, as the costs of moving from one point to the next are called, must not be negative in Dijkstra's algorithm. k-NN can …

I will try to explain the k-Nearest Neighbors (kNN) algorithm as simply as I can.
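To make the output format described above concrete (indexes plus the associated Euclidean distances, per query), here is a brute-force sketch in plain Python. The function name `knn_brute` is hypothetical: it is not kNN-CUDA's API, only a CPU illustration of the same kind of result, computed by measuring every query-reference distance and keeping the k smallest.

```python
import math
from typing import List, Sequence, Tuple


def knn_brute(ref: Sequence[Sequence[float]],
              queries: Sequence[Sequence[float]],
              k: int) -> Tuple[List[List[int]], List[List[float]]]:
    """For each query, return the indexes of its k nearest reference points
    and the matching Euclidean distances, both ordered by increasing distance."""
    all_idx, all_dist = [], []
    for q in queries:
        # Distance from this query to every reference point.
        dists = [math.dist(q, r) for r in ref]
        # Sort reference indexes by distance and keep the first k.
        order = sorted(range(len(ref)), key=lambda i: dists[i])[:k]
        all_idx.append(order)
        all_dist.append([dists[i] for i in order])
    return all_idx, all_dist
```

The cost is one distance per (query, reference) pair, which is exactly the workload a GPU can spread across many threads.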

Understanding K-Nearest Neighbour Algorithm in Detail

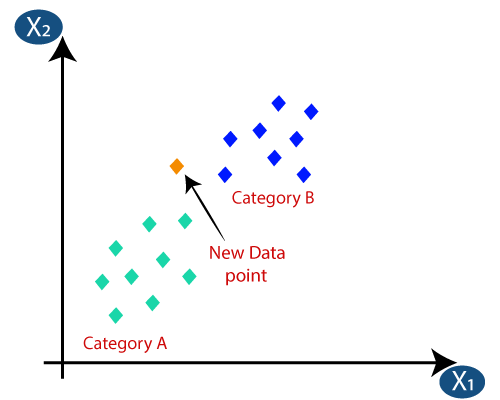

The "K" in the KNN algorithm is the number of nearest neighbours we take a vote from. As an example of the K-NN algorithm, consider 3-NN for binary classification using Euclidean distance: calculate the distance between the new data point and all data points in the training dataset, just as in basic k-NN.

Predictive modeling with KNN rests on the assumption that similar data points lie close to each other, and the algorithm hinges on this assumption being true enough to be useful. An algorithm gives you step-by-step instructions for solving a particular problem. This is a novel approach both from the statistical pattern recognition and the … In this article, you'll learn how the K-NN algorithm works through practical examples.

The k-nearest neighbors (k-NN) algorithm is a simple, easy-to-understand method used for classification and regression tasks in machine learning, with applications in pattern recognition, recommender systems, regression analysis, and healthcare. K-Nearest Neighbors (KNN) is an approach used for regression and classification problems in supervised machine learning. Intuitively, K is always a positive integer. The high computation demand of k-NN on large data cannot be met by traditional software-based approaches running on CPUs.

Evaluate performance: finally, the KNN algorithm's performance is evaluated using metrics such as accuracy, precision, recall, and F1-score. Dijkstra's algorithm is a so-called greedy algorithm. A larger k value produces smoother separation curves and therefore less complex models. The nearest neighbor (NN) classifiers, especially the k-NN algorithm, are among the simplest yet most efficient classification rules and are widely used in practice.
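The 3-NN binary classification example above can be sketched in a few lines of plain Python. The training points and the labels "A" and "B" are made-up toy data for illustration, and `knn_classify` is a hypothetical helper, not a library function.

```python
import math
from collections import Counter


def knn_classify(train_X, train_y, x, k=3):
    """Majority vote among the k training points closest to x (Euclidean)."""
    # Pair each training label with its distance to x, then sort by distance only.
    by_dist = sorted(zip((math.dist(p, x) for p in train_X), train_y),
                     key=lambda t: t[0])
    top_labels = [label for _, label in by_dist[:k]]
    return Counter(top_labels).most_common(1)[0][0]


# Hypothetical toy data: class "A" near the origin, class "B" near (5, 5).
train_X = [(0, 0), (1, 0), (0, 1), (5, 5), (6, 5), (5, 6)]
train_y = ["A", "A", "A", "B", "B", "B"]
print(knn_classify(train_X, train_y, (1, 1)))  # A
print(knn_classify(train_X, train_y, (4, 4)))  # B
```

With two classes, an odd k such as 3 guarantees the vote cannot end in a tie.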

Algorithm

Let us start with a simple example and understand how to choose the K value. In the K-NN algorithm, the output is a class membership. Using the K-NN algorithm on massive datasets requires high computational power.

KNN Algorithm: Guide to Using K-Nearest Neighbor for Regression

The k-NN algorithm is a non-parametric method, usually used for classification and regression. Authentication of Smartphone Users Based on the Way They Walk Using k-NN Algorithm. Abstract: Accelerometer-based biometric gait recognition offers a convenient way to authenticate users on their mobile devices. Dijkstra's algorithm helps you compute the shortest, or cheapest, paths. The K-Nearest Neighbors (K-NN) algorithm is a popular machine learning algorithm used mostly for solving classification problems. Disadvantages of KNN: 1. … Hence, we will now draw a circle centered on BS, just big enough to enclose only three data points. Implementation in Python. As you can see, the weight value of ID11 is missing.

The kNN algorithm is often described as the "simplest" supervised learning algorithm, which gives it several advantages. Simple: kNN is easy to implement because of its simplicity and accuracy. KNN belongs to supervised learning. Supervised learning generally trains a model on data, but KNN does not actually perform any training. KNN is generally used to classify data: if you already have a set of labeled data, newly added points can be assigned a class using KNN. It uses a non-parametric method for classification or regression. We'll use diagrams as well as sample data to show how you can classify data using the K-NN algorithm. [1] In both cases, the input consists of the k closest training examples in the feature space. The Minkowski distance function is used to measure how close they are.
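The Minkowski distance mentioned above generalizes the usual metrics through an order parameter p: p = 1 gives the Manhattan distance and p = 2 the Euclidean distance. A minimal sketch follows; the name `minkowski` and the choice to reject p < 1 (where the formula stops being a true metric) are our own assumptions.

```python
def minkowski(a, b, p=2.0):
    """Minkowski distance (sum |a_i - b_i|^p)^(1/p).

    p=1 gives the Manhattan distance, p=2 the Euclidean distance.
    """
    if p < 1:
        raise ValueError("p must be >= 1 for the result to be a metric")
    return sum(abs(x - y) ** p for x, y in zip(a, b)) ** (1.0 / p)


print(minkowski((0, 0), (3, 4), p=1))  # 7.0
print(minkowski((0, 0), (3, 4), p=2))  # 5.0
```

Swapping this function into any of the neighbor searches changes which points count as "nearest", which is why the metric is treated as a hyperparameter.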

Machine Learning: The KNN (K-Nearest Neighbors) Algorithm

Today, let's discuss one of the simplest algorithms in machine learning: the K-Nearest Neighbor algorithm (KNN). Field Programmable Gate Arrays (FPGAs) can be used to accelerate k-NN.

K-nearest Neighbor

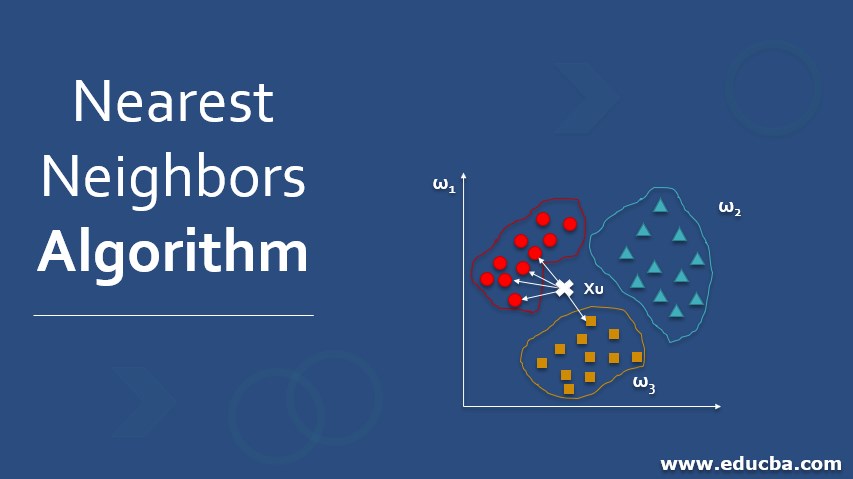

In this lecture, we will primarily talk about two different algorithms: the Nearest Neighbor (NN) algorithm and the k-Nearest Neighbor (kNN) algorithm. Scikit-learn is a popular library for machine learning in Python. An object is assigned the class that is most common among its K nearest neighbors, K being the number of neighbors.

Introduction to KNN Algorithms

Unlike most other machine learning algorithms, which learn during the training phase, kNN does essentially no work at training time and defers its computation to prediction time. By using an ensemble of five models trained on rotated versions of the original signatures, and using only three training samples from …

This interactive demo lets you explore the K-Nearest Neighbors algorithm for classification. Each point in the plane is colored with the class that the K-Nearest Neighbors algorithm would assign to it.

K-Nearest Neighbors (KNN) is a non-parametric machine learning algorithm that can be used for both classification and regression tasks. The K-Nearest Neighbor (K-NN) algorithm is one of the most widely used algorithms in machine learning applications. Among the many ML (machine learning) algorithms, k-nearest neighbour (KNN) is one of the most common in data classification research and in classifying diseases and faults. This matters because the training dataset changes frequently, and with most other methods it would be expensive to construct a new classifier every time that happens. Adaptable: as soon as new training samples are added to its dataset, kNN can use them without retraining. K-Nearest Neighbor (KNN) is intuitive to understand and easy to implement. Moreover, it is commonly used as the baseline classifier in many problem domains (Jain et al.).

The KNN (K-Nearest Neighbors) algorithm makes predictions based on two basic values. Distance: the distance from the point to be predicted to the other points is calculated. Two choices of weighting method are uniform and inverse distance. There are four options for the neighbor-search algorithm in KNN, namely kd_tree, ball_tree, auto, and brute. Select the k nearest data points. Among these K neighbors, count the number of data points in each category. Assign the new data point to the category with the most neighbors.

An algorithm consists of several individual steps. Algorithms are used mainly in computer science and are expressed in the form of programs.
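The two weighting methods named above, uniform and inverse distance, differ only in how much each neighbor's vote counts. The sketch below is our own plain-Python illustration; the option strings "uniform" and "distance" mirror the values of scikit-learn's `weights` parameter, but this function is hypothetical and not scikit-learn code. The small `eps` term is an assumption added to avoid dividing by zero when the query coincides with a training point.

```python
import math
from collections import defaultdict


def weighted_knn_classify(train_X, train_y, x, k=3, weights="uniform", eps=1e-12):
    """k-NN vote where each neighbor counts 1 ("uniform") or 1/d ("distance")."""
    neighbors = sorted(((math.dist(p, x), y) for p, y in zip(train_X, train_y)),
                       key=lambda t: t[0])[:k]
    scores = defaultdict(float)
    for d, label in neighbors:
        # Inverse-distance weighting lets very close neighbors dominate the vote.
        scores[label] += 1.0 if weights == "uniform" else 1.0 / (d + eps)
    return max(scores, key=scores.get)


# Hypothetical toy data: one "A" point very close to the query, two distant "B" points.
X = [(0, 0), (3, 0), (3.5, 0)]
y = ["A", "B", "B"]
print(weighted_knn_classify(X, y, (0.5, 0), weights="uniform"))   # B (2 votes vs 1)
print(weighted_knn_classify(X, y, (0.5, 0), weights="distance"))  # A (2.0 vs ~0.73)
```

The toy case shows why weighting matters: with uniform votes the two far-away "B" points outvote the much closer "A" point, while inverse-distance weighting reverses the decision.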

k-Nearest Neighbors

Google, for example, has a very … The K-NN algorithm works well when you are dealing with a small number of input variables (p) but struggles when there are a large number of inputs. Recall that kNN is a supervised learning algorithm that learns from training data with labeled target values. The k-nearest neighbors method (k-NN) is a supervised classification method (learning, with estimation based on a training set and prototypes) that serves to estimate the density function of the predictors for each class. We introduce three adaptation rules that can be used in iterative training of a k-NN classifier. We could also choose K based on cross-validation.
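Choosing K by cross-validation, as suggested above, can be sketched with leave-one-out validation: each training point is predicted from all the others, and the candidate K with the highest accuracy wins. This is a minimal illustration with hypothetical helper names and made-up toy data; the candidate list (1, 3, 5, 7) is our own assumption.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, x, k):
    """Plain majority-vote k-NN with Euclidean distance."""
    nearest = sorted(zip(train_X, train_y), key=lambda t: math.dist(t[0], x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def loo_accuracy(X, y, k):
    """Leave-one-out accuracy: predict each point from all the other points."""
    hits = 0
    for i in range(len(X)):
        rest_X = X[:i] + X[i + 1:]
        rest_y = y[:i] + y[i + 1:]
        hits += knn_predict(rest_X, rest_y, X[i], k) == y[i]
    return hits / len(X)


def choose_k(X, y, candidates=(1, 3, 5, 7)):
    """Return the candidate k with the best leave-one-out accuracy (first one on ties)."""
    return max(candidates, key=lambda k: loo_accuracy(X, y, k))


# Hypothetical toy data: two clusters of four points each.
X = [(0, 0), (0, 1), (1, 0), (1, 1), (5, 5), (5, 6), (6, 5), (6, 6)]
y = ["A"] * 4 + ["B"] * 4
print([loo_accuracy(X, y, k) for k in (1, 3, 5, 7)])  # [1.0, 1.0, 1.0, 0.0]
print(choose_k(X, y))  # 1
```

With k = 7 every point's neighborhood contains more points from the other cluster than from its own, which is the "k too large" failure discussed later in this article.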


While it can be used for either regression or classification problems, it is generally used as a classification algorithm. Points for which the K-Nearest Neighbor algorithm results in a tie are colored white. This can take into account the convenience of an individual carrying luggage, …

K-Nearest Neighbors (K-NN) Explained

A smaller k value, in contrast, tends to produce more complex decision boundaries that follow individual training points. KNN captures the idea of similarity (sometimes called distance, proximity, or closeness) with some mathematics we might have learned in childhood: calculating the distance between points on a graph. We need to predict the weight of this person based on their height and age, using the kNN algorithm. In this article, I will explain the basic concept of the KNN algorithm and how to … The output depends on whether k-NN is used for classification or regression. In both cases, the input depends on the k nearest observations in the feature space. For classification problems, the algorithm queries the k points closest to the sample point and returns the most frequent class label among them as the prediction. For regression problems, the algorithm returns the average of the k neighbors' values. When new data points come in, the algorithm predicts their class according to which side of the boundary they fall on.

The K-NN algorithm:

- Store all training data.
- For any test point x, find its top K nearest neighbors (under metric d).
- Return the most common label among these K neighbors (for regression, return the average value of the K neighbors).

To classify a test point plotted in XY coordinates, we can also split the training data points into a binary tree. The model's deep layers were searched to find the layer that provided the most discriminative features when using a 1-nearest-neighbour learning algorithm based on cosine distance. The nearest neighbor algorithm is often used in classification projects as a predictive performance benchmark when you are trying to develop more sophisticated models.
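The K-NN steps listed above translate almost directly into code: "training" is just storing the data, and prediction either takes a majority vote (classification) or averages the neighbors' targets (regression). The sketch below uses a hypothetical function `knn` and made-up (height in cm, age in years) to weight in kg values; these numbers are illustrative and are not the table referred to in this article.

```python
import math
from collections import Counter
from statistics import mean


def knn(train_X, train_y, x, k, task="classification"):
    """Generic k-NN.

    task="classification": most common label among the k nearest neighbors.
    task="regression": average target value of the k nearest neighbors.
    """
    # Training is just storing (train_X, train_y); all the work happens here.
    neighbors = sorted(zip(train_X, train_y), key=lambda t: math.dist(t[0], x))[:k]
    targets = [target for _, target in neighbors]
    if task == "classification":
        return Counter(targets).most_common(1)[0][0]
    if task == "regression":
        return mean(targets)
    raise ValueError(f"unknown task: {task!r}")


# Hypothetical data: (height cm, age years) -> weight kg.
X = [(170, 30), (160, 25), (180, 40), (175, 35)]
weights_kg = [70, 55, 85, 78]
print(knn(X, weights_kg, (172, 32), k=2, task="regression"))  # 74, mean of 70 and 78
```

Because raw distances mix units here (centimetres and years), features with larger numeric ranges dominate; in practice the features are usually scaled before running k-NN.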

Algorithms consist of a finite number of well-defined individual steps. The Amazon SageMaker k-nearest neighbors (k-NN) algorithm is an index-based algorithm.

Classification with learning k-nearest neighbors

Take the K nearest neighbors of the unknown data point according to distance. The K-NN algorithm is very simple, and the first five steps are the same for both classification and regression.

Machine Learning Basics with the K-Nearest Neighbors Algorithm

Modern smartphones contain built-in accelerometers, which can be used as sensors to acquire the necessary data while the … If k is too small, the algorithm is more sensitive to outliers. Let's say K = 3. If k is too large, the neighborhood may include too many points from other classes. The K-NN algorithm essentially creates an imaginary boundary to classify the data. The choice of metric: 1. …

This KNN article aims to:

- Understand the K Nearest Neighbor (KNN) algorithm's representation and prediction.

KNN is one of the …

What is kNN?

An algorithm (named after al-Khwarizmi, from Arabic: الخوارزمی al-Ḫwārizmī, German 'der Choresmier', "the Khwarezmian") is an unambiguous procedure for solving a problem or a class of problems.

The nearest neighbor algorithm, or k-Nearest Neighbors algorithm (k-NN for short), is a non-parametric algorithm for classification and local regression, first developed by Evelyn Fix and Joseph Hodges in 1951. k-NN operates by classifying items based on their similarity to nearby data points, without … The k-nearest neighbors algorithm, also known as KNN or k-NN, is a non-parametric, supervised learning classifier that uses proximity to make classifications or predictions about the grouping of an individual data point. In pattern recognition, the k-nearest neighbors algorithm (k-NN) is a non-parametric method used for classification or regression. The K-nearest neighbors algorithm (KNN) is a very simple yet powerful machine learning model: a supervised algorithm used for classification and regression tasks. One of the many issues that affect the performance of the kNN algorithm is the choice of the hyperparameter k.

To apply SelB-k-NN to various AI processors, we propose two algorithms that reduce the hardware support requirements. Since matrix multiplication operates on data through a specifically designed memory hierarchy that k-selection does not share, the … SelB-k-NN avoids the expansion of weakly supported operations on huge datasets. [1] Complete project on GitHub.
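The split described above, a matrix-multiplication phase followed by a separate k-selection phase, rests on a standard identity: the squared Euclidean distance expands as ||q - r||² = ||q||² - 2 q·r + ||r||², so the only pairwise term is a dot product, i.e. a matrix product. The plain-Python sketch below is a generic illustration of that two-phase structure under our own assumptions; it is not SelB-k-NN, and `sq_dists_via_dot` and `k_select` are hypothetical names.

```python
import heapq


def sq_dists_via_dot(queries, refs):
    """Squared Euclidean distances via ||q - r||^2 = ||q||^2 - 2 q.r + ||r||^2.

    The q.r terms for all pairs form the matrix product Q R^T, which is the
    part that matrix-multiplication hardware executes efficiently.
    """
    q_norms = [sum(v * v for v in q) for q in queries]
    r_norms = [sum(v * v for v in r) for r in refs]
    # max(0, ...) guards against tiny negative values from floating-point rounding.
    return [[max(0, qn - 2 * sum(a * b for a, b in zip(q, r)) + rn)
             for r, rn in zip(refs, r_norms)]
            for q, qn in zip(queries, q_norms)]


def k_select(row, k):
    """k-selection: indexes of the k smallest entries of one distance row."""
    return heapq.nsmallest(k, range(len(row)), key=row.__getitem__)


row = sq_dists_via_dot([(0, 0)], [(3, 4), (1, 1), (0, 2)])[0]
print(row)               # [25, 2, 4]
print(k_select(row, 2))  # [1, 2]
```

Squared distances preserve the neighbor ordering, so the square root can be skipped entirely during k-selection.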

KNN Algorithm

Steps involved in weighted k-NN: 1. …

NN is just a special case of kNN, where k = 1. kNN assigns a label to a new sample based on the labels of its k closest samples in the training set.
