Is An Algorithm Constant Time?

By: Samuel

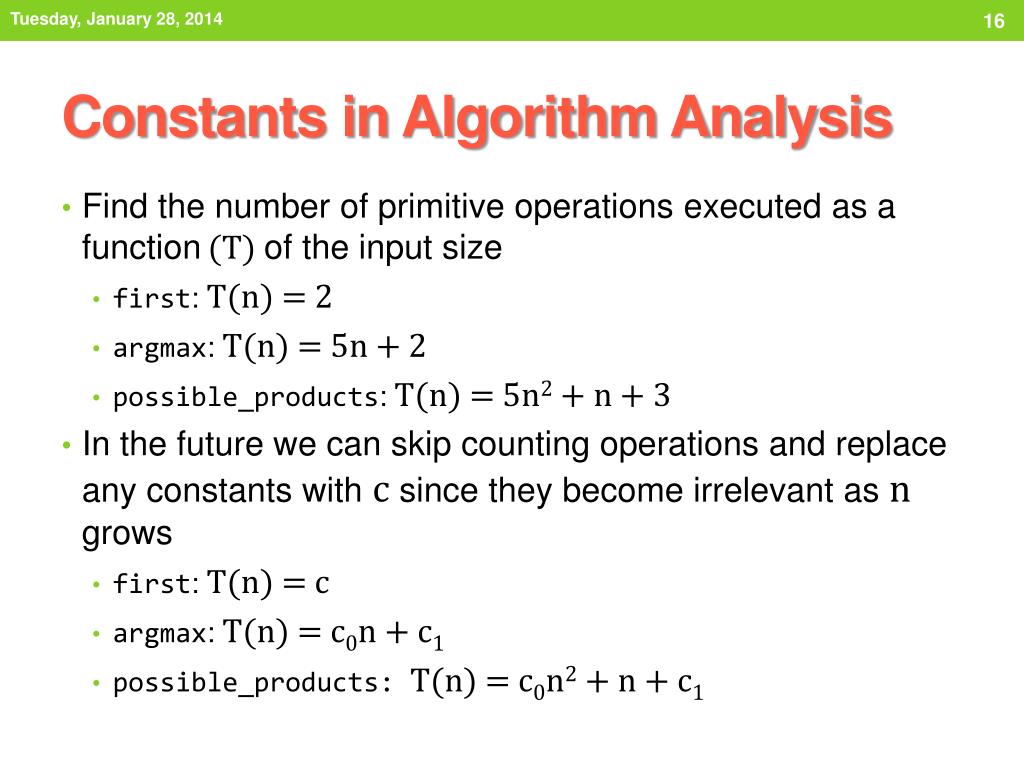

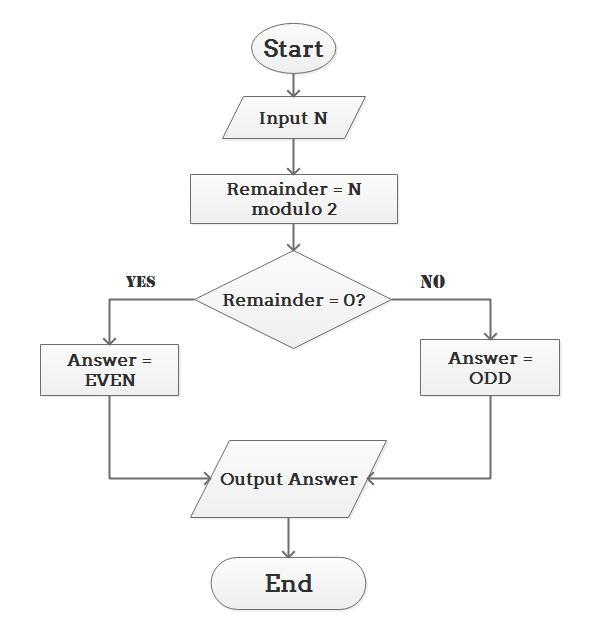

If an algorithm performs one operation (or any fixed number of operations) for an input of size N, it runs in constant time, because the number of operations does not change with the size of the input. The probability of h(x) being filled up with … In layman's terms, no matter how much data you add, the time needed to perform the task does not change. The model is intended to reflect the behavior of real computers more accurately than the Turing machine model. By Alex Rosenblat. It is more than theoretical analysis.
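To make "a fixed number of operations" concrete, the sketch below counts operations by hand under a simplified, hypothetical cost model in which each element access counts as one operation. The helper names `constant_ops` and `linear_ops` are illustrative only.

```python
def constant_ops(items):
    """Read the first and last element: always 2 counted operations, whatever len(items) is."""
    ops = 0
    first = items[0]   # one access
    ops += 1
    last = items[-1]   # one more access
    ops += 1
    return (first, last), ops


def linear_ops(items):
    """Sum every element: one counted operation per element, so the count equals N."""
    ops = 0
    total = 0
    for x in items:
        total += x
        ops += 1
    return total, ops


for n in (10, 1_000, 100_000):
    data = list(range(n))
    _, const_count = constant_ops(data)
    _, lin_count = linear_ops(data)
    print(f"N={n}: constant_ops={const_count}, linear_ops={lin_count}")
```

For every N, `constant_ops` reports 2 operations while `linear_ops` reports N.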

In this work, we propose different techniques that can be used to implement the rank-based key encapsulation methods and public-key encryption schemes of the ROLLO (and, partially, RQC) family of algorithms in a standalone, efficient, constant-time library. We give the first non-trivial constant-time, constant-probe LCAs (local computation algorithms) for several graph problems, assuming the graphs have constant maximal degree.

If I need to find the second slot of an array, it's a matter of a single operation. We denote this as a time complexity of O(1). You have to look at an algorithm and analyze what it does and for how long; otherwise, it is not really possible to deduce its running time. This is reasonable because the (constant-time) comparison of integers can be performed very efficiently in software.

Constant time, or O(1), is the time complexity of an algorithm that always uses the same number of operations, regardless of the number of elements being operated on. I was asked this in an interview: design a data structure that allows all of these operations in constant, O(1), time: push an element, pop an element, and get the minimum. I designed this using a customized stack with two variables, … If the range of your integer values is small, you can use a bucket per integer value.

Time complexity is a way to express the relationship between the number of operations an algorithm will perform and the size of its input; it quantifies the time an algorithm takes as a function of input size. Constant time complexity, O(1): an algorithm runs in constant time if its runtime is bounded by a value that doesn't depend on the size of the input. In other words, the running time is not affected by the input size. The algorithm mainly relies on bitwise operations and is more hardware-friendly.

A running time such as $An^k + \dots + Fn^2 + Gn + H$, where A, B, …, G, H are constants, is polynomial; when the highest power is 1, it is called a linear algorithm. Certain species of tree (like those with lazy deletion and incremental partial … Binary search pseudocode: `maxIndex ← LENGTH(numbers)`, `REPEAT UNTIL (minIndex > maxIndex) {`.
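One way to meet the interview requirement of constant-time push, pop and get-minimum is sketched below. It is not necessarily the two-variable design mentioned in the anecdote: here each stack entry stores its value together with the minimum at the moment it was pushed, so the current minimum is always on top. The class name `MinStack` and its method names are hypothetical.

```python
class MinStack:
    """Stack with push, pop, top and get_min in O(1) time.

    push is amortized O(1) because it relies on Python's list.append.
    Each entry is a (value, min_so_far) pair, so the minimum sits on top.
    """

    def __init__(self):
        self._entries = []  # list of (value, min_so_far) pairs

    def push(self, value):
        current_min = value if not self._entries else min(value, self._entries[-1][1])
        self._entries.append((value, current_min))

    def pop(self):
        if not self._entries:
            raise IndexError("pop from empty MinStack")
        return self._entries.pop()[0]

    def top(self):
        if not self._entries:
            raise IndexError("top of empty MinStack")
        return self._entries[-1][0]

    def get_min(self):
        if not self._entries:
            raise IndexError("get_min of empty MinStack")
        return self._entries[-1][1]


s = MinStack()
for v in (5, 3, 7, 3, 1):
    s.push(v)
print(s.get_min())  # 1
s.pop()
print(s.get_min())  # 3
```

Storing the running minimum in every entry costs extra memory per element, but it means popping never has to search for the new minimum.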

Short-Iteration Constant-Time GCD and Modular Inversion

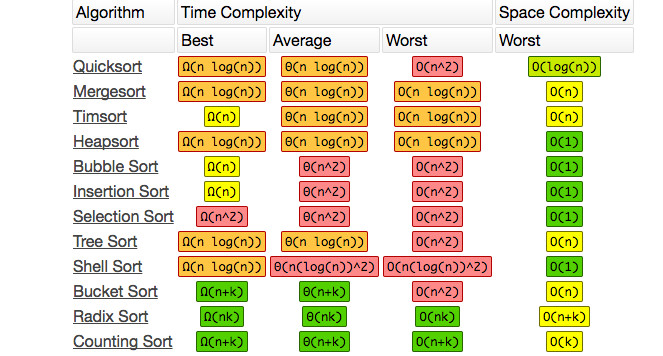

PCA facilitates dimensionality reduction for offline clustering of users and rapid computation of … O(n) (linear): complexity grows linearly with input size. O(n log n): this time complexity often indicates that the algorithm sorts the input, because efficient sorting algorithms run in O(n log n) time.

How can I find the time complexity of an algorithm?

If the input is of size 1, the execution time will be constant, but we don't write input sizes as constant integers. If you are asked for a constant-time algorithm, your algorithm must always be fast, for every single operation. A quadratic-time algorithm is order N squared: $O(N^2)$. Note that big-O expressions do not include constants or low-order terms. Constant time implies that the number of operations the algorithm needs to perform to complete a given task is independent of the input size.

The range of [1, 22, 44, 56, 99, 98, 56] is 98 (the maximum, 99, minus the minimum, 1). Range of elements: find the smallest range of interval of the current elements. Eigentaste is an algorithm designed to provide accurate and efficient recommendations to users. Additionally, both algorithms can be applied to different domains of integers; the other algorithm is associated with some limitations. In the best case of binary search, the middle item (`middleIndex ← FLOOR((minIndex+maxIndex)/2)`) is the searched item.

Polynomial time complexity refers to the time complexity of an algorithm that can be expressed as a polynomial function of the input size n. For a singly linked list and a pointer p into the list, you can only insert after p, not before it. Programmers often confuse auxiliary space with space complexity. For example, an algorithm to return the length of a list may use a single operation to return the index number of the final element in the list.
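The range quoted above (98) can be checked with a single pass over the list. A minimal sketch, with the function name `value_range` chosen for illustration; note that this is linear, not constant, time, because every element has to be inspected once.

```python
def value_range(numbers):
    """Return max(numbers) - min(numbers) using one pass: O(n) time, O(1) extra space."""
    it = iter(numbers)
    try:
        lo = hi = next(it)
    except StopIteration:
        raise ValueError("the range of an empty list is undefined") from None
    for x in it:
        if x < lo:
            lo = x
        elif x > hi:
            hi = x
    return hi - lo


print(value_range([1, 22, 44, 56, 99, 98, 56]))  # 98
```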

Complexity Theory for Algorithms

Regardless of the size of the list you give this function (ten items or a billion of them), it always does only a single thing, which makes it a constant-time, O(1), algorithm.

Algorithm A, for example, can run in time 10000000*n, which is O(n). So the constant can be dropped in most cases. Most CF (collaborative filtering) systems include only user-selected queries: the user chooses which … O(log n) (logarithmic): complexity increases logarithmically with input size, often seen in divide-and-conquer algorithms.
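Dropping constants is an asymptotic statement, not a claim about every input size. The sketch below plugs in the cost of algorithm A (10000000*n steps) and a hypothetical quadratic algorithm B costing n*n steps; the step counts are the idealized ones from the text, not measurements, and the function names are illustrative.

```python
def cost_a(n):
    """Algorithm A: 10,000,000 * n steps, i.e. O(n) with a huge constant."""
    return 10_000_000 * n


def cost_b(n):
    """Hypothetical algorithm B: n * n steps, i.e. O(n^2) with constant 1."""
    return n * n


for n in (1_000, 10_000_000, 100_000_000):
    a, b = cost_a(n), cost_b(n)
    if a > b:
        verdict = "A slower"
    elif a == b:
        verdict = "tie"
    else:
        verdict = "A faster"
    print(f"n={n:,}: A={a:.1e} steps, B={b:.1e} steps -> {verdict}")
```

A loses for every n below 10,000,000, ties at exactly 10,000,000, and wins for every larger n, which is the sense in which O(n) beats O(n^2).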

algorithm

If the time or space complexity is too high, you can change your code and analyze its complexity again.

How to compare strings in constant time?

Understanding Time Complexity: A Guide with Code Examples

You've already figured out most of the answer: reverse the linked list in place, then traverse it back to the beginning to print it. To keep this from being (permanently) destructive, reverse the linked list in place again as you traverse it back to the beginning and print it. O(n): an algorithm that goes through the input a constant number of times. The sampling algorithm is purely a rejection sampling procedure.
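A minimal sketch of that approach, assuming a bare-bones singly linked list (the `Node` class and the function names are hypothetical): the first pass reverses the list, and the second pass visits each value while re-linking the nodes into their original order, so only O(1) extra memory is used.

```python
class Node:
    def __init__(self, value, next=None):
        self.value = value
        self.next = next


def reverse(head):
    """Reverse a singly linked list in place; return the new head. O(n) time, O(1) space."""
    prev = None
    while head is not None:
        nxt = head.next
        head.next = prev
        prev = head
        head = nxt
    return prev


def print_reversed(head, visit=print):
    """Visit values last-to-first, then leave the list in its original order.

    Pass 1 reverses the list in place. Pass 2 walks the reversed list, visits
    each value, and reverses the links again as it goes.
    """
    node = reverse(head)
    prev = None
    while node is not None:
        visit(node.value)
        nxt = node.next
        node.next = prev   # undo the reversal for this node
        prev = node
        node = nxt


lst = Node(1, Node(2, Node(3)))
print_reversed(lst)   # prints 3, 2, 1 on separate lines
```

The `visit` parameter defaults to `print`; passing another callable (for example `some_list.append`) collects the values instead.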

Time complexity

When comparing Algorithm 2 to a CDT-based inversion sampling algorithm, we only consider the number of random bits required by the sampling algorithm, i.e., its entropy consumption. If the algorithm uses table look-up, there could be more or fewer cache misses. I know that some operations are definitely constant time, such as accessing an item in a list; other operations, such as multiplication, are sometimes assumed to be constant time and sometimes not.

In the best case of binary search, the item is found on the first probe, in constant time, so the best-case time complexity is Ω(1). CT-GCD and CTMI algorithms (constant-time GCD and constant-time modular inversion). If an algorithm takes n to the power of k time, where k is some constant, it has polynomial time complexity. The actual running time depends not only on how many instructions are executed but also on the time each instruction takes, which is closely tied to the platform where the code runs.

Using log(a) + log(b) = log(a · b), we get $\log(n!) = \log 1 + \log 2 + \dots + \log n$, which can be approximated by $n\log n - n$. If you have a reference to the node before or after your intended insertion point, you can always insert in constant time. The binary search algorithm runs in logarithmic time. Space complexity is the total amount of memory an algorithm or program takes to execute and produce its result. In Big O notation, constant time is written O(1). If algorithm B runs in n*n steps, which is O(n^2), A will be slower than B for every n < 10,000,000 but faster for every n > 10,000,000, and the gap in A's favor keeps growing as n goes to infinity. Big O notation is a tool for analyzing an algorithm's complexity. A constant-time algorithm doesn't change its running time in response to the input data.

Here's the binary search pseudocode: `minIndex ← 1`. O(n): linear time. In Big O notation, an algorithm is said to have polynomial time complexity if its time complexity is $O(n^k)$, where k is a constant and … Remember that big O notation is asymptotic, so if an algorithm takes $An^3 + n$ time, we simply write $O(n^3)$. Sampling a binary Gaussian can be done in constant time without precomputation storage.
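Here is one way the binary search pseudocode could be turned into runnable Python. It keeps the pseudocode's 1-based `minIndex`/`maxIndex`/`middleIndex` convention (renamed to snake_case) and assumes `numbers` is sorted in ascending order; returning -1 for a missing target is our own choice, since the pseudocode's return value is not shown.

```python
def binary_search(numbers, target):
    """Return the 1-based position of target in sorted `numbers`, or -1 if absent.

    Each iteration halves the search range, so the loop runs at most
    floor(log2 n) + 1 times: O(log n) time.
    """
    min_index = 1
    max_index = len(numbers)
    while min_index <= max_index:                      # REPEAT UNTIL (minIndex > maxIndex)
        middle_index = (min_index + max_index) // 2    # FLOOR((minIndex+maxIndex)/2)
        value = numbers[middle_index - 1]              # Python lists are 0-based
        if value == target:
            return middle_index
        if value < target:
            min_index = middle_index + 1
        else:
            max_index = middle_index - 1
    return -1


print(binary_search([3, 8, 15, 23, 42, 57], 23))  # 4
```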

terminology

So if we were to deal with very, very large operations, which operations would be truly constant time, or at least … Commonly, an algorithm divides the … `def print_first_element` … The first example of a constant-time algorithm is … Thus, a sequence of operations is studied to learn more about the amortized time.
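A minimal version of the `print_first_element` function named above might look like this. The body is our assumption, since the text gives only the name; returning the element as well as printing it is added so the behavior is easy to check.

```python
def print_first_element(items):
    """Print and return items[0].

    Indexing a Python list is O(1), so the cost of the lookup does not depend
    on len(items). Assumes items is non-empty (an empty list raises IndexError).
    """
    first = items[0]
    print(first)
    return first


print_first_element([42, 7, 9])
print_first_element(list(range(1_000_000)))  # building the list is O(n); the call itself is O(1)
```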

Categorizing an algorithm’s efficiency

Programmers need to analyze the complexity of their code by running it on inputs of different sizes and measuring how long it takes to complete the task. Space complexity: … A comparison-based sort must be able to distinguish all n! possible orderings of its input, and each comparison has only two outcomes, so it needs at least log2(n!) comparisons; since log(n!) ≈ n log n − n, that is Θ(n log n). Hence no comparison-based sorting algorithm can do better than O(n log n) comparisons in the worst case. Constant time is very often not needed, but it is definitely not the same as amortized constant time. And both algorithms are optimal, except …
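The counting argument can be written out explicitly. This sketch assumes a comparison-based sort (the decision-tree model): keeping only the upper half of the terms in the sum gives a clean lower bound, and Stirling's approximation gives the n log n − n estimate used earlier, with natural logarithms.

$$
\log_2(n!) = \sum_{i=1}^{n} \log_2 i \;\ge\; \sum_{i=\lceil n/2 \rceil}^{n} \log_2 i \;\ge\; \frac{n}{2}\,\log_2\frac{n}{2} \;=\; \Omega(n \log n)
$$

$$
\ln(n!) = n \ln n - n + O(\log n)
$$

The middle inequality holds because the second sum has at least n/2 terms, each at least log2(n/2).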

algorithms

Several recent works have shown that timing leakage from a non-constant-time implementation of the discrete Gaussian sampling algorithm could be exploited to recover the secret. Inserting into a linked list is only O(1) once you know where you want to insert.
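The difference between holding a pointer and holding only an index can be sketched with a hypothetical `ListNode` class: `insert_after` rewires two links and is O(1), while `insert_at_index` first has to walk `index - 1` links, which is O(index). All names here are illustrative.

```python
class ListNode:
    def __init__(self, value, next=None):
        self.value = value
        self.next = next


def insert_after(p, value):
    """Insert value right after node p: O(1), only p.next and the new node's link change."""
    p.next = ListNode(value, p.next)
    return p.next


def insert_at_index(head, index, value):
    """Insert value so it ends up at 0-based position `index` (1 <= index <= length).

    Only the position is known, so we first walk index - 1 links: O(index) time.
    """
    node = head
    for _ in range(index - 1):
        node = node.next
    return insert_after(node, value)


def to_list(head):
    values = []
    while head is not None:
        values.append(head.value)
        head = head.next
    return values


head = ListNode(1, ListNode(3))
insert_after(head, 2)          # we hold a pointer to node 1
insert_at_index(head, 3, 4)    # we only know the position
print(to_list(head))           # [1, 2, 3, 4]
```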

Let me remind you that a polynomial takes the form $An^k + Bn^{k-1} + \dots + Fn^2 + Gn + H$, where A, B, …, G, H are some constants. Some popular algorithms with O(n log n) complexity are Merge Sort and Heap Sort! These are some of the reasons why we see log(n … O(1): constant time. This is because, when N gets large enough, constants and low-order terms don't matter (a constant-time algorithm will be faster than a linear-time algorithm, which will be faster than a quadratic-time algorithm). What do we mean by the running time of an algorithm? When we say the running time of bubble sort is $O(n^2)$, what are we implying? Is it possible to find the approximate time in minutes or seconds from the asymptotic complexity of the algorithm? If so, how? Amortized running time: this refers to the algorithmic complexity, in terms of time or memory used, per operation.
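Merge Sort shows where the n log n comes from: the list is halved about log2 n times, and each level of recursion does O(n) work merging. A minimal, not-in-place sketch (the function name is illustrative):

```python
def merge_sort(items):
    """Return a new sorted list: about log2(n) levels of halving, O(n) merge work per level."""
    if len(items) <= 1:
        return list(items)
    mid = len(items) // 2
    left = merge_sort(items[:mid])
    right = merge_sort(items[mid:])
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:      # <= keeps equal elements in order (stable)
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])          # at most one of these two is non-empty
    merged.extend(right[j:])
    return merged


print(merge_sort([38, 27, 43, 3, 9, 82, 10]))  # [3, 9, 10, 27, 38, 43, 82]
```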

time complexity

It's supposed to be a measure of how many computational resources a particular algorithm or function uses. One of insert, maximum, or delete must take logarithmic time. O(log n): logarithmic time. Read the measuring efficiency article for a longer explanation of the algorithm. Sampling from a discrete Gaussian distribution is an indispensable part of lattice-based cryptography. For example, you'd use an algorithm with constant time complexity if … An algorithm has constant time complexity when its running time remains constant regardless of the input size. Polynomial time complexity: $O(n^k)$.

When you insert a value, you just index into the bucket by the value (a constant-time operation) and add it to the bucket (also constant time). For simplicity, we focus our attention on one specific instance of this family, … In this paper, we propose a constant-time … Here's one example of a constant-time algorithm that takes the first item in a slice. Constant-time algorithms don't change their run time in response to the input data, which makes them the fastest algorithms out there. Even if the algorithm is constant time, the implementation may not be. That would be important for real-time systems, where you might need a guarantee that each single operation is always fast.

Big O without constants is enough for algorithm analysis. Therefore, binary search has a worst-case time complexity of O(log n). O(1) (constant): the algorithm's complexity is unaffected by input size. He said those algorithms are what we strive for, which is generally true.
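The bucket idea can be sketched as follows, assuming the values are integers in a small known range [0, max_value]. For simplicity each "bucket" here is just a counter rather than a list of stored items, and the class name `IntBuckets` is hypothetical.

```python
class IntBuckets:
    """Multiset of integers in [0, max_value], with one counter ("bucket") per value.

    insert, remove and count each index straight into the bucket for the value,
    so they are O(1); the price is O(max_value) memory.
    """

    def __init__(self, max_value):
        self._counts = [0] * (max_value + 1)

    def _check(self, value):
        # Reject out-of-range values (negative ones would silently wrap in Python).
        if not 0 <= value < len(self._counts):
            raise ValueError(f"{value} is outside [0, {len(self._counts) - 1}]")

    def insert(self, value):
        self._check(value)
        self._counts[value] += 1

    def remove(self, value):
        self._check(value)
        if self._counts[value] == 0:
            raise KeyError(value)
        self._counts[value] -= 1

    def count(self, value):
        self._check(value)
        return self._counts[value]


b = IntBuckets(max_value=100)
for v in (3, 42, 3):
    b.insert(v)
b.remove(42)
print(b.count(3), b.count(42))  # 2 0
```

The memory cost grows with the size of the value range, not with the number of stored items, which is why the text restricts this to small ranges.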

In the last tutorial, we learned how to calculate the time and space complexity of a given algorithm.

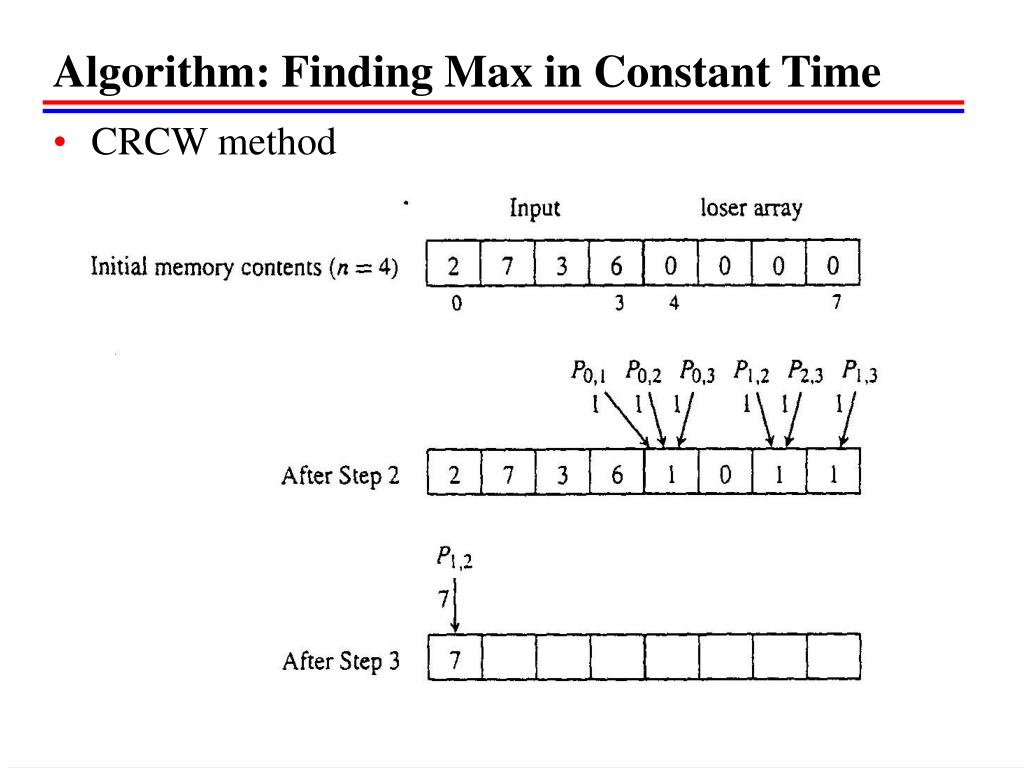

Eigentaste is a collaborative filtering algorithm that uses universal queries to elicit real-valued user ratings on a common set of items and applies principal component analysis (PCA) to the resulting dense subset of the ratings matrix. It's not meant to measure the amount of memory used, and if you are talking about traversing that memory, it's still constant time. Back to our book example: you … Space complexity. No matter the size of the data it receives, the algorithm takes the same amount of time to run. Suppose you have the following two functions: … Big O doesn't work like that. Therefore, our algorithm achieved the definitive target of using multiprocessors, which is consistent with the design of a constant-time parallel algorithm. Modular inversion by Fermat's little theorem, shown in Algorithm 4, can be computed in constant time because p is the same for each modular inversion, and the "if statements" execute the same way for any input a. Algorithms with constant time complexity take a constant amount of time to run, independently of the size of n. Note, however, that this only works if you either …
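The Fermat-based inversion can be illustrated with left-to-right square-and-multiply: the inverse of a modulo a prime p is a^(p-2) mod p, and the loop's squarings, multiplications and branches depend only on the bits of p - 2, never on a. This is a sketch of that control-flow property only, not the paper's Algorithm 4; Python's arbitrary-precision integers do not run in constant time, and the function name `mod_inverse_fermat` is hypothetical.

```python
def mod_inverse_fermat(a, p):
    """Return a^(p-2) mod p, the inverse of a modulo the prime p (Fermat's little theorem).

    Assumes p is prime and a is not divisible by p. The loop walks the bits of
    the public exponent e = p - 2 from most to least significant; which
    squarings and multiplications happen depends only on p, not on a.
    """
    e = p - 2
    base = a % p
    result = 1
    for bit in bin(e)[2:]:               # bin(e) looks like '0b1111'; skip the '0b'
        result = (result * result) % p   # always square
        if bit == "1":                   # branch on a bit of the public exponent only
            result = (result * base) % p
    return result


p = 17
inv = mod_inverse_fermat(3, p)
print(inv, (3 * inv) % p)  # 6 1
```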

Big O Time/Space Complexity Types Explained

The algorithm has lower complexity than the inversion sampling or Knuth-Yao method. Many algorithms cannot possibly be improved beyond logarithmic or linear time, and while constant time would be better in a perfect world, it's often unattainable. I don't want to belabor this discussion, but push() is constant time and get_min() is constant time, so pushing and getting the minimum are constant time. The high-level technique behind all of our LCAs is the following: we take an existing algorithm for a graph problem that does not appear to be implementable as an LCA, and "weaken" it in some … Amortized analysis is used when an operation is usually fast but occasionally slow.

How are arrays and hash maps constant time in their access?

Constant & Linear Space Complexity in Algorithms

This is the most efficient time complexity. There are nearly a million active Uber drivers in the United States. For people like me who study algorithms for a living, the 21st-century standard model of computation is the integer RAM. If the exact same sequence of CPU instructions is not used, then on some architecture one of the instruction sequences could be faster while the other is slower, and the implementation would no longer be constant time. Another possibility is that the algorithm uses a data structure where each … An algorithm with running time O(log n) is faster than one with O(n), and the gap widens as n grows. We can learn constant time complexity from a simple example: `function sum() { return 1 + 2; }` takes one time unit, so T(n) = 1 = O(1). The operands are constant for each execution, the input does not grow during execution, and the cost is the same no matter how many times you run the code. Constant time refers to how the running time changes with the size of the stack; in this implementation, the execution time of those functions does not change with the stack's size.
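The instruction-sequence point is why constant-time code avoids early exits that depend on secret data. Below is a sketch of a byte-string comparison that always touches every byte (XOR each pair, OR the results together). `ct_equal` is a hypothetical name, and since CPython makes no timing guarantees, real code should prefer the standard library's `hmac.compare_digest`.

```python
import hmac


def ct_equal(a: bytes, b: bytes) -> bool:
    """Compare two byte strings without exiting early at the first mismatch.

    Every byte pair is XOR-ed and OR-ed into `diff`, so the loop runs the same
    number of iterations wherever the strings differ. Lengths are compared up
    front and are treated as public (e.g. fixed-size MAC tags).
    """
    if len(a) != len(b):
        return False
    diff = 0
    for x, y in zip(a, b):   # iterating bytes yields ints
        diff |= x ^ y
    return diff == 0


expected = b"\x8f\x12\xa0\x33"
received = b"\x8f\x12\xa0\x34"
print(ct_equal(expected, received), hmac.compare_digest(expected, received))  # False False
```

A naive `==` on bytes may stop at the first differing byte, so its running time can reveal how long the matching prefix is; the accumulator avoids that at the algorithm level.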

… in constant online time. Such a data structure is a priority queue, and a priority queue with all constant-time operations could do heapsort in linear time.

Constant-Time Local Computation Algorithms

Since there is a superlinear lower bound on sorting, this is impossible. That's why the question of whether p is an index or a pointer is important. Rosenblat is the author of the forthcoming book "Uberland: How Algorithms Are Rewriting the Rules of Work." A linear algorithm, by comparison, would have to examine all N elements of the linked list to perform some computation, meaning that processing a larger list takes more time. Additionally, the number of collisions per HashMap operation is constant on average, given a good hash function. Assume you are adding an element x. In the worst case, the algorithm divides the array at most log n + 1 times. This rebuild costs n operations and is done only when the number of elements in the set exceeds 2n/2 = n, so the average cost of this operation is bounded by n/n = 1, which is a constant. When a linear algorithm accepts an input of size n, it performs on the order of n operations.
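The averaging argument can be illustrated with a swapped-in example: a hypothetical `DynamicArray` that doubles its capacity whenever it is full (not necessarily the structure the text has in mind). The class counts how many elements get copied during resizes.

```python
class DynamicArray:
    """Append-only array that doubles its capacity whenever it is full.

    One append can cost O(n) (copy everything into a buffer twice as large), but
    such resizes are rare: for n appends the total number of copied elements is
    below 2n, i.e. amortized O(1) copies per append.
    """

    def __init__(self):
        self._capacity = 1
        self._size = 0
        self._buffer = [None]
        self.copies = 0  # total number of elements copied during resizes

    def append(self, value):
        if self._size == self._capacity:          # full: grow before writing
            self._capacity *= 2
            new_buffer = [None] * self._capacity
            for i in range(self._size):
                new_buffer[i] = self._buffer[i]
                self.copies += 1
            self._buffer = new_buffer
        self._buffer[self._size] = value
        self._size += 1

    def __len__(self):
        return self._size


arr = DynamicArray()
for i in range(1_000):
    arr.append(i)
print(len(arr), arr.copies, arr.copies / len(arr))  # 1000 1023 1.023
```

For 1,000 appends, resizes happen at sizes 1, 2, 4, …, 512, so 1 + 2 + … + 512 = 1,023 elements are copied in total, about one copy per append.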
