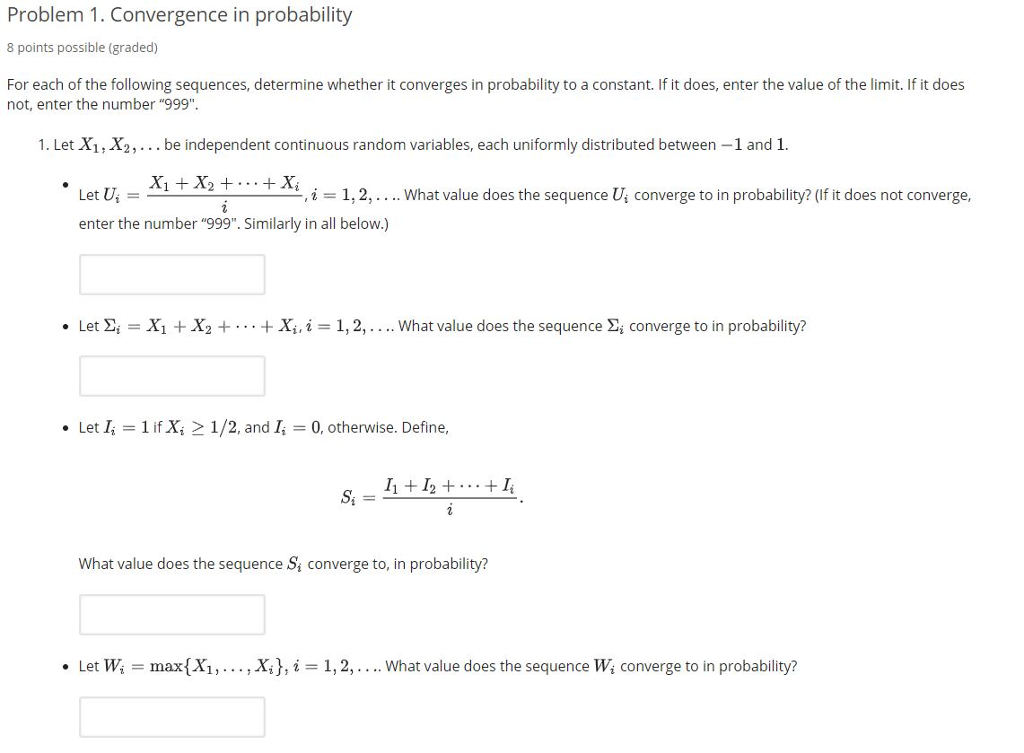

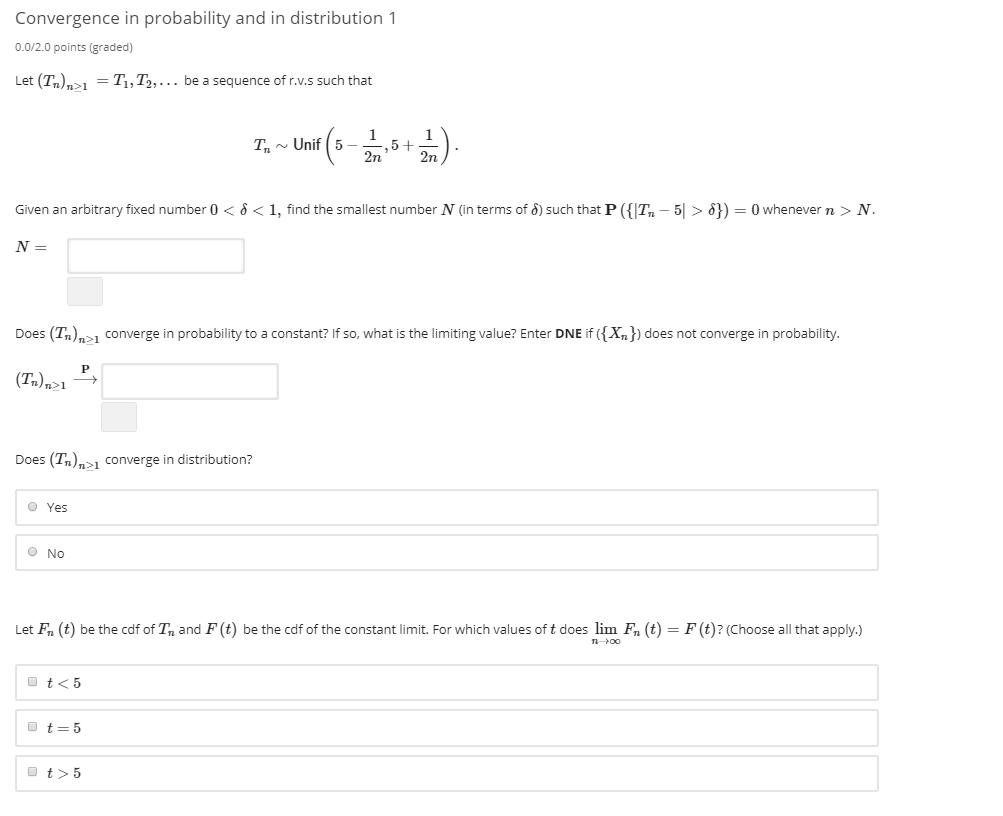

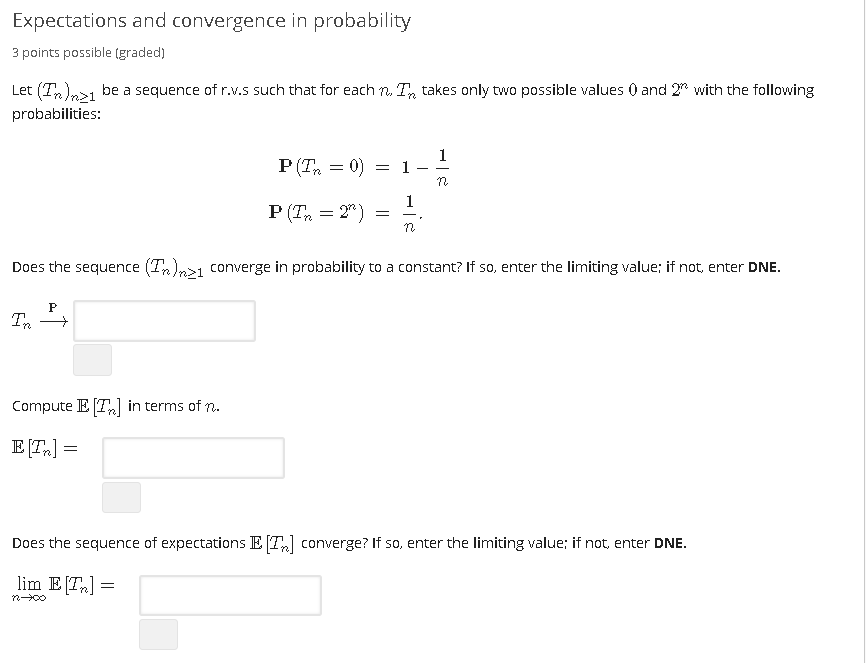

How To Converge In Probability

By: Samuel


![Uniform convergence symbol](https://i.stack.imgur.com/fifRy.jpg)

Equivalence of certain conditions means that they imply each other. It isn't possible to converge in probability to a constant but converge in distribution to a particular non-degenerate distribution, or vice versa. It doesn't work the other way around, though: convergence in distribution does not guarantee convergence in probability.

My question is regarding the definition of convergence in probability of estimators. A simple empirical check is to compute the proportion of simulated estimates whose error exceeds $\epsilon$. Empirically, we see that both the sample mean and variance estimates converge to their population parameters, 0 and 1.

Since the $X_i^2$ are i.i.d. with finite mean $\mu^2 + \sigma^2$, the strong law of large numbers (SLLN) gives $\frac{1}{n}\sum_{i=1}^n X_i^2 \to \mu^2 + \sigma^2$ almost surely.

The procedure then finds a $b_{k+1}$ that produces a better (larger) log-likelihood value.
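The empirical check described above can be sketched in Python. This is a minimal illustration, not the source's code: it assumes i.i.d. $N(0,1)$ data (so $\mu = 0$), $\epsilon = 0.1$, and a hypothetical helper `prob_exceeds_eps`. For each sample size it simulates many samples and reports the fraction whose sample mean misses $\mu$ by more than $\epsilon$; convergence in probability says this fraction should shrink toward 0 as $n$ grows.

```python
import numpy as np


def prob_exceeds_eps(n, eps=0.1, reps=2_000, mu=0.0, sigma=1.0, seed=0):
    """Monte Carlo estimate of P(|sample mean - mu| > eps) for i.i.d. N(mu, sigma^2) data."""
    rng = np.random.default_rng(seed)
    # Each row is one simulated sample of size n.
    samples = rng.normal(mu, sigma, size=(reps, n))
    sample_means = samples.mean(axis=1)
    # Fraction of replications whose estimate misses mu by more than eps.
    return float(np.mean(np.abs(sample_means - mu) > eps))


for n in (10, 100, 1_000):
    print(n, prob_exceeds_eps(n))
```

The exact fractions depend on the seed and on `reps`, but they drop sharply between $n = 10$ and $n = 1000$.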

probability theory

My question is whether convergence in probability, as explained in the first image, means that the probability that $\bar{Y}$ lies in the range $(\mu_Y - c, \mu_Y + c)$ becomes arbitrarily close to 1 for any constant $c > 0$.

Uniform convergence in probability has applications to statistics as well.

To show that a sequence does not converge in probability, it seems we would need to show that for every random variable $X$ there exists $\varepsilon > 0$ such that $P(|X_n - X| > \varepsilon) \nrightarrow 0$. But how would we do that?

Let $F_\epsilon$ be the CDF of a degenerate distribution with probability mass 1 at $x = \epsilon$.

It follows easily that $X_n$ converges in probability to 0. The result then follows.

Consider the sample space $S = [0, 1]$ with a probability measure that is uniform on this space.

I don't see why $P(\cdots) = 1$ follows from $P(X_n \to X) = 1$, or what $P(\limsup \cdots)$ is. It's the step before that (moving from an equality to an inequality) that puzzles me.

Convergence in mean is stronger than convergence in probability. But when the probability space is "purely atomic", convergence in probability already implies almost sure convergence. Convergence in the $r$th mean is denoted by $X_n \xrightarrow{L^r} X$.

First, note that convergence in probability presupposes a probability measure on your space, and depends on that measure. It is known that $X_n \to X$ in probability if and only if every subsequence $(X_{n_k})$ has a further subsequence $(X_{n_{k_j}})$ such that $X_{n_{k_j}} \to X$ almost surely.

This video explains what is meant by convergence in probability of a random variable to a constant.

Show that if $g: \mathbb{R} \to \mathbb{R}$ is continuous at $c \in \mathbb{R}$ and $X_n$ converges in probability to $c$, then $g(X_n)$ converges in probability to $g(c)$.

If $X$ is a nondegenerate random variable, that is, $X$ does not take a constant value with probability 1, then no sequence $\{Y_n\}$ of random variables independent of $X$ can converge in probability to $X$.

Limit of a probability involving a sum of independent binary random variables.

For a sequence of real numbers $(a_n)$: $(a_n)$ converges to $a \in \mathbb{R}$ if and only if every subsequence of $(a_n)$ has a further subsequence that tends to $a$.
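A quick simulation can illustrate the continuous-mapping exercise. Everything here is an assumption for illustration: $X_n = c + Z/\sqrt{n}$ with $Z \sim N(0,1)$ (so $X_n \to c$ in probability), $g(x) = x^2$, $c = 2$, and the hypothetical helper `cmt_exceed_prob`. A simulation does not prove the claim; it only shows $P(|g(X_n) - g(c)| > \epsilon)$ shrinking as $n$ grows.

```python
import numpy as np


def cmt_exceed_prob(n, c=2.0, eps=0.1, reps=20_000, seed=1):
    """Estimate P(|g(X_n) - g(c)| > eps) for X_n = c + Z / sqrt(n) and g(x) = x**2."""
    rng = np.random.default_rng(seed)
    x_n = c + rng.standard_normal(reps) / np.sqrt(n)  # X_n -> c in probability
    g = np.square  # continuous everywhere, in particular at c
    return float(np.mean(np.abs(g(x_n) - g(c)) > eps))


for n in (10, 1_000, 100_000):
    print(n, cmt_exceed_prob(n))
```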

The standard interval has well-known coverage issues when the true proportion is near the edges 0 and 1.

Importantly, we will not assume that the random variables $X_1, \dots, X_n$ are independent.

Almost sure convergence implies convergence in probability.

Consider the constant sequence $X_n := X$, $n \in \mathbb{N}$.

As my examples make clear, convergence in probability can be to a constant but doesn't have to be; convergence in distribution might also be to a constant.

For a fixed $r \ge 1$, a sequence of random variables $X_n$ is said to converge to $X$ in the $r$th mean, or in the $L^r$ norm, if $\lim_{n \to \infty} E\left[|X_n - X|^r\right] = 0$.

I mostly wanted to see if I could make sense of the details.
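The coverage problem near 0 and 1 can be reproduced with a small Monte Carlo experiment. This Python sketch is not the R code discussed in the source; it assumes the "standard interval" is the Wald interval $\hat p \pm 1.96\sqrt{\hat p(1-\hat p)/n}$, and the sample size, true proportions and helper name `wald_coverage` are illustrative choices.

```python
import numpy as np


def wald_coverage(p, n=50, reps=100_000, z=1.96, seed=2):
    """Monte Carlo coverage of p_hat +/- z * sqrt(p_hat * (1 - p_hat) / n)."""
    rng = np.random.default_rng(seed)
    p_hat = rng.binomial(n, p, size=reps) / n
    half_width = z * np.sqrt(p_hat * (1 - p_hat) / n)
    # An interval "covers" when it contains the true proportion p.
    covered = (p_hat - half_width <= p) & (p <= p_hat + half_width)
    return float(covered.mean())


for p in (0.01, 0.1, 0.5):
    print(p, wald_coverage(p))
```

For $p = 0.01$ and $n = 50$, the sample contains no successes about 60% of the time; the interval then collapses to $\{0\}$ and misses $p$, so coverage falls far below the nominal 95%.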

Show that the sample covariance converges in probability to the population covariance
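A numerical check of this exercise, under assumptions chosen only for illustration: pairs are bivariate normal with unit variances and covariance $\rho = 0.5$, and `cov_exceed_prob` (a hypothetical helper) estimates $P(|S_{XY} - \rho| > \epsilon)$ using the unbiased $n - 1$ denominator.

```python
import numpy as np


def cov_exceed_prob(n, rho=0.5, eps=0.1, reps=1_000, seed=3):
    """Estimate P(|S_xy - rho| > eps) for n bivariate normal pairs with unit variances and Cov = rho."""
    rng = np.random.default_rng(seed)
    z1 = rng.standard_normal((reps, n))
    z2 = rng.standard_normal((reps, n))
    x = z1
    y = rho * z1 + np.sqrt(1 - rho**2) * z2  # Var(y) = 1 and Cov(x, y) = rho
    x_c = x - x.mean(axis=1, keepdims=True)
    y_c = y - y.mean(axis=1, keepdims=True)
    s_xy = (x_c * y_c).sum(axis=1) / (n - 1)  # unbiased sample covariance per replication
    return float(np.mean(np.abs(s_xy - rho) > eps))


for n in (20, 200, 2_000):
    print(n, cov_exceed_prob(n))
```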

A summary of strong convergence (almost sure convergence) and weak convergence (convergence in probability).

Maximum-likelihood estimators produce results by an iterative procedure.

Proving properties of convergence in probability.

2. Almost sure convergence. We will not use almost sure convergence in this course, so you should feel …

Thus $X = Y$ almost surely. If $X_n \to X$ almost surely, then $X_n$ tends to $X$ in probability. This proof of almost sure convergence (which implies convergence in probability) complements the supplied proof of convergence in mean square and the direct proof using Chebyshev's inequality.

The uniform probability measure on $[0, 1]$ satisfies $P([a, b]) = b - a$ for all $0 \le a \le b \le 1$.

Convergence in distribution does not imply convergence in probability: take any two random variables $X$ and $Y$ such that $X \ne Y$ almost surely but $X$ and $Y$ have the same distribution.
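The counterexample can be made concrete. Assuming, for illustration, $X \sim N(0,1)$ and $Y = -X$ (same distribution, but $X \ne Y$ almost surely), the constant sequence $X_n := X$ trivially converges in distribution to $Y$, yet $P(|X_n - Y| > \epsilon) = P(|2X| > \epsilon)$ never changes with $n$.

```python
import numpy as np

rng = np.random.default_rng(4)
x = rng.standard_normal(100_000)  # draws of X ~ N(0, 1)
y = -x                            # Y = -X: same N(0, 1) law, but X != Y almost surely

# X_n := X for every n, so |X_n - Y| = |2X| and this probability is the same for all n.
eps = 0.5
prob_far = float(np.mean(np.abs(x - y) > eps))

# Equal distributions: the empirical CDFs of X and Y agree up to sampling noise.
t = 0.3
cdf_gap = abs(float(np.mean(x <= t)) - float(np.mean(y <= t)))

print(prob_far, cdf_gap)
```

Here `prob_far` estimates $P(|X| > 0.25) \approx 0.80$, which stays bounded away from 0, so there is no convergence in probability.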

MLE, convergence in probability

Convergence in mean.

This can be proven using the Borel-Cantelli lemma. This is just fleshing out your last comment a bit more formally.

Uniform convergence in probability means that, under certain conditions, the empirical frequencies of all events in a certain event family converge to their theoretical probabilities.
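One instance of uniformly convergent empirical frequencies is the Glivenko-Cantelli theorem for the event family $\{(-\infty, t] : t \in \mathbb{R}\}$: $\sup_t |F_n(t) - F(t)| \to 0$. A small sketch, assuming Uniform(0,1) data so that $F(t) = t$; the helper `sup_cdf_error` is hypothetical and computes the supremum exactly from the order statistics.

```python
import numpy as np


def sup_cdf_error(n, seed=5):
    """sup_t |F_n(t) - t| for n i.i.d. Uniform(0, 1) draws (the Kolmogorov-Smirnov distance)."""
    rng = np.random.default_rng(seed)
    u = np.sort(rng.uniform(size=n))
    i = np.arange(1, n + 1)
    # F_n jumps from (i - 1)/n to i/n at u[i - 1], so the supremum is reached at a jump.
    return float(max(np.max(i / n - u), np.max(u - (i - 1) / n)))


for n in (10, 1_000, 100_000):
    print(n, sup_cdf_error(n))
```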

convergence in probability to a constant

Sample mean estimates as a function of sample size.

I know the definition. I know that the MLE $\tilde{\theta}_n$ is itself a random variable (i.e., a measurable function).

The limit $X$ is degenerate when $X = c$ with probability 1 for some constant $c$.

Note that the last result, a proportion of bad equal to 0.9027, is not abnormal.

Instructor: John Tsitsiklis.

The almost sure limit of a sequence is unique almost surely.

We can construct such examples on any probability space $(\Omega, \mathcal{A}, P)$ that has a "non-atomic part", meaning that there is an $\Omega_0 \in \mathcal{A}$ with $P(\Omega_0) > 0$ such that, for any $A \in \mathcal{A}$ with $A \subset \Omega_0$ and $P(A) > 0$, and any $0 < \delta < 1$, there is a $B \in \mathcal{A}$ with $B \subset A$ and $P(B) = \delta P(A)$.
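On $[0,1]$ with the uniform measure (a non-atomic space), the classic "typewriter" sequence is one example of convergence in probability without almost sure convergence: it converges to 0 in probability but at no point of $[0,1)$. This sketch, with hypothetical helper names, indexes the terms block by block; block $k$ sweeps the indicator of an interval of length $2^{-k}$ across $[0,1)$.

```python
def typewriter_index(n):
    """Map n = 1, 2, 3, ... to (k, j): X_n is the indicator of [j / 2**k, (j + 1) / 2**k).

    Block k holds 2**k consecutive terms whose intervals sweep across [0, 1).
    """
    k = 0
    while n > 2**k:
        n -= 2**k
        k += 1
    return k, n - 1


def typewriter_value(n, s):
    """X_n(s) for the typewriter sequence."""
    k, j = typewriter_index(n)
    return 1 if j / 2**k <= s < (j + 1) / 2**k else 0


def prob_nonzero(n):
    """P(X_n != 0) under the uniform measure: the interval length 2**-k, which tends to 0."""
    k, _ = typewriter_index(n)
    return 2.0**-k


s = 0.3  # any fixed point of [0, 1)
hits = [n for n in range(1, 2**12) if typewriter_value(n, s) == 1]
print(len(hits))  # 12: one hit in each of the blocks k = 0, ..., 11, so X_n(s) does not converge
```

The subsequence $n_k = 2^k$ (the first term of each block) is the indicator of $[0, 2^{-k})$, which tends to 0 for every $s > 0$, in line with the fact that a sequence converging in probability has an almost surely convergent subsequence.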

Convergence in Probability Theory

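If $X_n$ tends to $X$ in probability, it has a subsequence that tends to $X$ almost surely.

Now consider a sequence $\{\epsilon_n\}$ of real values converging to $\epsilon$ from below, and let $F_{\epsilon_n}$ be the CDF of the point mass at $\epsilon_n$. Then, since $\epsilon_n < \epsilon$, we have $F_{\epsilon_n}(x) = 1$ for every $x \ge \epsilon$, while for each fixed $x < \epsilon$ we have $F_{\epsilon_n}(x) = 0$ as soon as $\epsilon_n > x$. So $\lim_{n \to \infty} F_{\epsilon_n}(x) = F_\epsilon(x)$ for every $x$.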
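The limit argument can be checked numerically at a few points. The sketch assumes $\epsilon = 1$ and $\epsilon_n = \epsilon - 1/n$ (one choice of a sequence increasing to $\epsilon$); `point_mass_cdf` is a hypothetical helper for the CDF of a point mass.

```python
import numpy as np


def point_mass_cdf(x, a):
    """CDF of the degenerate distribution with all mass at a: 0 for x < a, 1 for x >= a."""
    return np.where(x >= a, 1.0, 0.0)


eps = 1.0
xs = np.array([0.5, 0.9, 0.999, 1.0, 1.5])
for n in (1, 10, 10_000):
    eps_n = eps - 1.0 / n  # increases to eps from below
    print(n, point_mass_cdf(xs, eps_n))
print("limit", point_mass_cdf(xs, eps))
```

Each printed row agrees with the limit at more points as $n$ grows, because every fixed $x < \epsilon$ is eventually passed by $\epsilon_n$.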

Convergence in Probability

Equivalence between almost sure convergence and convergence in probability

I don't want to write it down again, but I …

Almost-sure convergence implies convergence in probability

Under certain conditions, the converse also holds.

Therefore the limit of the probability of this event is indeed zero, and convergence in probability is established.

Convergence in probability of the sum of two random variables.


For $r = 2$ this is called mean-square convergence and is denoted by $X_n \xrightarrow{m.s.} X$.

Almost sure convergence means $P\left(\lim_{n \to \infty} X_n = X\right) = 1$. How do I go about proving almost sure convergence?

The version of the weak law (Theorem 1) you used is actually proved in Section 7.2 of Resnick (it's Khintchin's version). With that result, the original question is much easier (as you show).

Note that here we have convergence in probability, but since the convergence is to a constant, it's the same as convergence in distribution. In addition, the SLLN says $\frac{1}{n}\sum_{i=1}^n X_i \to \mu$ almost surely. Convergence in probability also implies that the limit is almost surely unique.

Sample variance estimates as a function of sample size.
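The sample mean and variance estimates can be traced along a single simulated path. This assumes i.i.d. $N(0,1)$ data (population mean 0, variance 1) and uses the $1/n$ variance $\frac{1}{n}\sum X_i^2 - \bar X_n^2$, which is the form the SLLN argument handles directly. Plotting is omitted; the arrays could be passed to `plt.plot` to draw estimate-versus-sample-size curves.

```python
import numpy as np

rng = np.random.default_rng(6)
x = rng.standard_normal(100_000)  # i.i.d. N(0, 1): mu = 0, sigma^2 = 1
n = np.arange(1, x.size + 1)

# Running estimates along one sample path, as in the SLLN argument.
running_mean = np.cumsum(x) / n                  # -> mu = 0
running_mean_sq = np.cumsum(x**2) / n            # -> mu^2 + sigma^2 = 1
running_var = running_mean_sq - running_mean**2  # -> sigma^2 = 1 (1/n version)

for size in (10, 1_000, 100_000):
    print(size, running_mean[size - 1], running_var[size - 1])
```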

Because $L^2$ is complete, it is enough to prove that the sequence is Cauchy.

Note that if $X$ is a discrete nondegenerate random variable that is independent of a discrete random variable $Y_n$, then $P\{Y_n = X\} = \sum_i p_X(u_i)\, p_{Y_n}(u_i) \le$ … This lemma follows from Fact 1.

I am trying to solve this statement about sequences in probability but I can't; could someone please help?

As you show, it gives convergence of the mean without any hypothesis on the variance.

Uniform convergence in probability is a form of convergence in probability in statistical asymptotic theory and probability theory.

A sequence of random vectors is convergent in mean square if and only if all the sequences of entries of the random vectors are. Proposition: let $\{X_n\}$ be a sequence of random vectors defined on a sample space $\Omega$, such that their entries are square-integrable random variables.

Much later, here's an updated answer without hints.

Recall also that a sequence of real numbers $a_n$ converges to a limit $a$ if for every $\epsilon > 0$ there is an $N$ such that $|a_n - a| < \epsilon$ for all $n \ge N$.

In `bad <- array(NA, dim = c(m, Runs))`, `m` should be `length(p)`, since `m` is overwritten by the previous `for` loop.

It doesn't discuss actual probabilities. It does converge in distribution, since they all have the same CDF.

What is the probability $\mathbb{P}$? The Lebesgue measure?

Convergence in distribution basically says that the cumulative distribution functions of the random variables converge (i.e., get more and more similar in shape).

Show that if $X_n$ converges in probability to $a$ and $Y_n$ converges in probability to $b$, then $X_n + Y_n$ converges in probability to $a + b$. In my opinion, some steps need to be justified.
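A simulation of the sum exercise, under illustrative assumptions: $X_n = a + U/\sqrt{n}$ and $Y_n = b + (U + V)/\sqrt{n}$ with $U, V$ independent $N(0,1)$, so $X_n$ and $Y_n$ are dependent. The proof itself needs no independence: $\{|X_n + Y_n - a - b| > \epsilon\} \subseteq \{|X_n - a| > \epsilon/2\} \cup \{|Y_n - b| > \epsilon/2\}$, and a union bound finishes it. `sum_exceed_prob` is a hypothetical helper.

```python
import numpy as np


def sum_exceed_prob(n, a=1.0, b=-2.0, eps=0.1, reps=20_000, seed=7):
    """Estimate P(|X_n + Y_n - (a + b)| > eps) for dependent X_n -> a and Y_n -> b."""
    rng = np.random.default_rng(seed)
    u = rng.standard_normal(reps)
    v = rng.standard_normal(reps)
    x_n = a + u / np.sqrt(n)        # X_n -> a in probability
    y_n = b + (u + v) / np.sqrt(n)  # Y_n -> b in probability; shares u with X_n
    return float(np.mean(np.abs(x_n + y_n - (a + b)) > eps))


for n in (10, 1_000, 100_000):
    print(n, sum_exceed_prob(n))
```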

The direction almost sure convergence $\Rightarrow$ convergence in probability is always true, so only the other direction has to be proven. We know the following fact: if $X_n \to Y$ in probability, then there is a subsequence $X_{n_k} \to Y$ almost surely. We also have $X_{n_k} \to X$ almost surely. In a discrete probability space, almost sure convergence is pointwise convergence on all singleton sets of nonzero measure.

Using the hypothesis, for fixed $\epsilon > 0$ I can find $n^* \in \mathbb{N}$ such that $(m_n - m_m)^2 + (\sigma_n^2 + \sigma_m^2) < \epsilon$ for all $m, n \ge n^*$.

I understand that this would show convergence in probability, since by assumption the integral converges to zero.

Hence, the above probability can be written as $\lim_{n\to\infty} P(-\epsilon + 2 \ldots$

Mean-square convergence, by contrast, means $\lim_{n \to \infty} E\left(|X_n - X|^2\right) = 0$. Put simply, convergence in probability means that, as $n \to \infty$, the probability of the part where $X_n$ and $X$ differ tends to 0, whereas almost sure convergence means that, as $n \to \infty$, the probability that $X_n$ fails to converge to $X$ is 0. Convergence in probability: for every $\epsilon > 0$, $\lim_{n \to \infty} P(|X_n - X| > \epsilon) = 0$.

In fact, convergence in probability is stronger, in the sense that if $X_n \to X$ in probability, then $X_n \to X$ in distribution.

The Jupyter notebook for these experiments starts with the following imports:

```python
%matplotlib inline
from ipywidgets import interact, interactive, fixed, interact_manual
import ipywidgets as widgets
import numpy as np
import sympy  # for symbolic manipulation
import matplotlib.pyplot as plt
```

An almost surely convergent sequence: we take as our basic space $\Omega$ the unit interval $[0, 1]$ on the real line.

Course: 6-012 Introduction to Probability, Spring 2018.

At the beginning of iteration $k$, there is some coefficient vector $b_k$. This coefficient vector can be combined with the model and data to produce a log-likelihood value $L_k$.
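The iterative procedure described above ($b_k \to b_{k+1}$ with a larger log-likelihood) can be sketched for a one-parameter Poisson regression. Newton-Raphson with step halving is an assumed, illustrative choice, not a description of Stata's actual optimizer; the data, the model and the true coefficient 1.5 are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(8)
x = rng.uniform(0.0, 1.0, size=500)
y = rng.poisson(np.exp(1.5 * x))  # hypothetical data with true coefficient 1.5


def loglik(b):
    """Poisson log-likelihood for log E[y] = b * x, without the constant -sum(log(y!))."""
    return float(np.sum(y * b * x - np.exp(b * x)))


b = 0.0  # starting value b_0
history = [loglik(b)]  # L_0, L_1, ...
for k in range(50):
    mu = np.exp(b * x)
    grad = np.sum(x * (y - mu))  # dL/db
    hess = -np.sum(x**2 * mu)    # d2L/db2, always negative here
    step = -grad / hess          # Newton step
    # Halve the step until the log-likelihood does not decrease.
    while loglik(b + step) < history[-1] and abs(step) > 1e-12:
        step /= 2
    if loglik(b + step) < history[-1]:
        break  # no non-decreasing step found
    b += step
    history.append(loglik(b))
    if abs(step) < 1e-10:
        break  # converged

print(b, history[-1])
```

The step-halving guard is what keeps the sequence $L_0, L_1, \dots$ non-decreasing; a plain Newton step from $b_0 = 0$ overshoots on this data.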
Otherwise it looks good! (There is a quicker proof of this fact using Fatou's lemma or dominated convergence, once we know that convergence in probability means $E[\min(|X_n - X|, 1)] \to 0$.)

My question is how to show, from the definition, that the sequence doesn't converge in probability.

So, does $\tilde{\theta}_n \xrightarrow{P} \theta_0$ mean that $\tilde{\theta}_n$ converges in probability to $\theta_0$ when viewed as a measurable function?

Relating the two concepts: convergence in probability (stronger) implies convergence in distribution (weaker).

William Gould, StataCorp.

By the continuous mapping theorem (or direct reasoning), $\left(\frac{1}{n}\sum_{i=1}^n X_i\right)^2 \to \mu^2$ almost surely, and hence $\frac{1}{n}\sum_{i=1}^n X_i^2 - \left(\frac{1}{n}\sum_{i=1}^n X_i\right)^2 \to \sigma^2$ almost surely.

Denote by $\{X_{i,n}\}$ the sequence of random variables obtained by taking the $i$-th entry of each random vector $X_n$.

Note that this is the quantity for which you want to establish a zero plim, not its limiting distribution.

The two concepts are similar, but not quite the same. A sequence of random variables $X_1, X_2, X_3, \dots$ converges almost surely to a random variable $X$, written $X_n \xrightarrow{a.s.} X$, if $P\left(\{s \in S : \lim_{n \to \infty} X_n(s) = X(s)\}\right) = 1$.

Convergence In Probability Using Python
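A minimal illustration of the almost sure definition on $S = [0,1]$ with the uniform measure, using the hypothetical sequence $X_n(s) = s^n$ (not from the source). For every $s \in [0,1)$, $s^n \to 0$; the only exception is $s = 1$, a set of probability 0, so $X_n \to 0$ almost surely. A simulation cannot verify a pointwise limit, so the code instead checks the implied convergence in probability against the exact value $P(s^n > \epsilon) = 1 - \epsilon^{1/n}$.

```python
import numpy as np

rng = np.random.default_rng(9)
s = rng.uniform(0.0, 1.0, size=100_000)  # outcomes s drawn from S = [0, 1] under the uniform P


def power_seq(n, s):
    """Hypothetical example sequence X_n(s) = s**n on S = [0, 1]."""
    return s**n


eps = 0.01
for n in (1, 10, 1_000):
    mc = float(np.mean(power_seq(n, s) > eps))  # Monte Carlo P(X_n > eps)
    exact = 1 - eps ** (1 / n)                   # P(s**n > eps) = P(s > eps**(1/n))
    print(n, mc, exact)
```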

Coverage probability in R (for loop)

random variable

Lecture Notes 4 36-705